Google Home with Gemini

Google Home's AI Assistant has been underperforming…

Smart homes could be smarter with an assistant that understands you as a human, not as a command.

Hero inspired by apple.com/siri

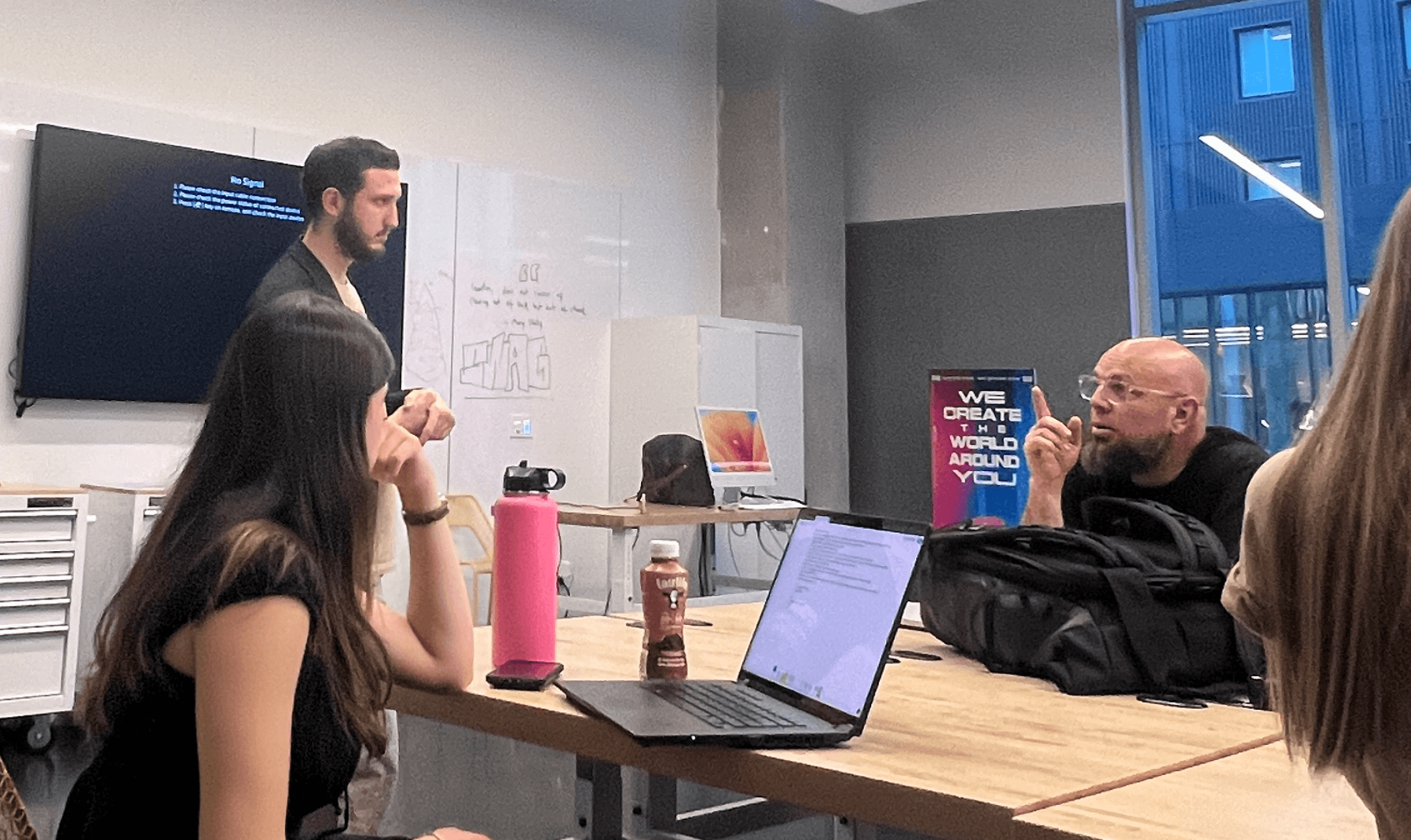

As part of SCAD's UX department partnership with Google, we were tasked with digging into Google Home to identify its problems and applying industry-standard UX research practices to see how it stacks up against the competition.

To produce an actionable outcome for Google, I led the design process and benchmarked my concept directly against the original Google Home. We collaborated with Google team members, and by the end of the quarter we delivered our actionable, validated insights through a presentation and a client book.

Core Team

Darya Andreyeva, Nikita Rapoport, Raven Glister, Ilayda Ozcan, Kevin Esmael Liu, SCAD

No defined roles, but I took on a leading role in the project (100% peer review score, 97% class grade)

Google Mentors/Collaborators

Uthkarsh Seth - Sr. Staff UX Manager, Google

Additional Collaborators/Resources

Product Research Team @ State Farm

Lane Kinkade - UX Professor

Tools

Maze

ElevenLabs

Material 3 for mobile UI screens

Google VUI Design Guidelines

Timeline

9 weeks (Spring 2024)

Is Google Home really listening, or just hearing us?

Problem

Google Home doesn’t proactively learn about its users. Instead, it relies on asking for users’ preferences, which they must enter manually. This makes its value as an assistant entirely dependent on the user’s time and effort. The challenge, then, was to explore what Gemini could solve.

Google Home and its AI Assistant fall significantly short of user expectations, with 100% of our survey and user testing participants reporting high levels of dissatisfaction with its voice capabilities and overall interaction experience.

Major Issues

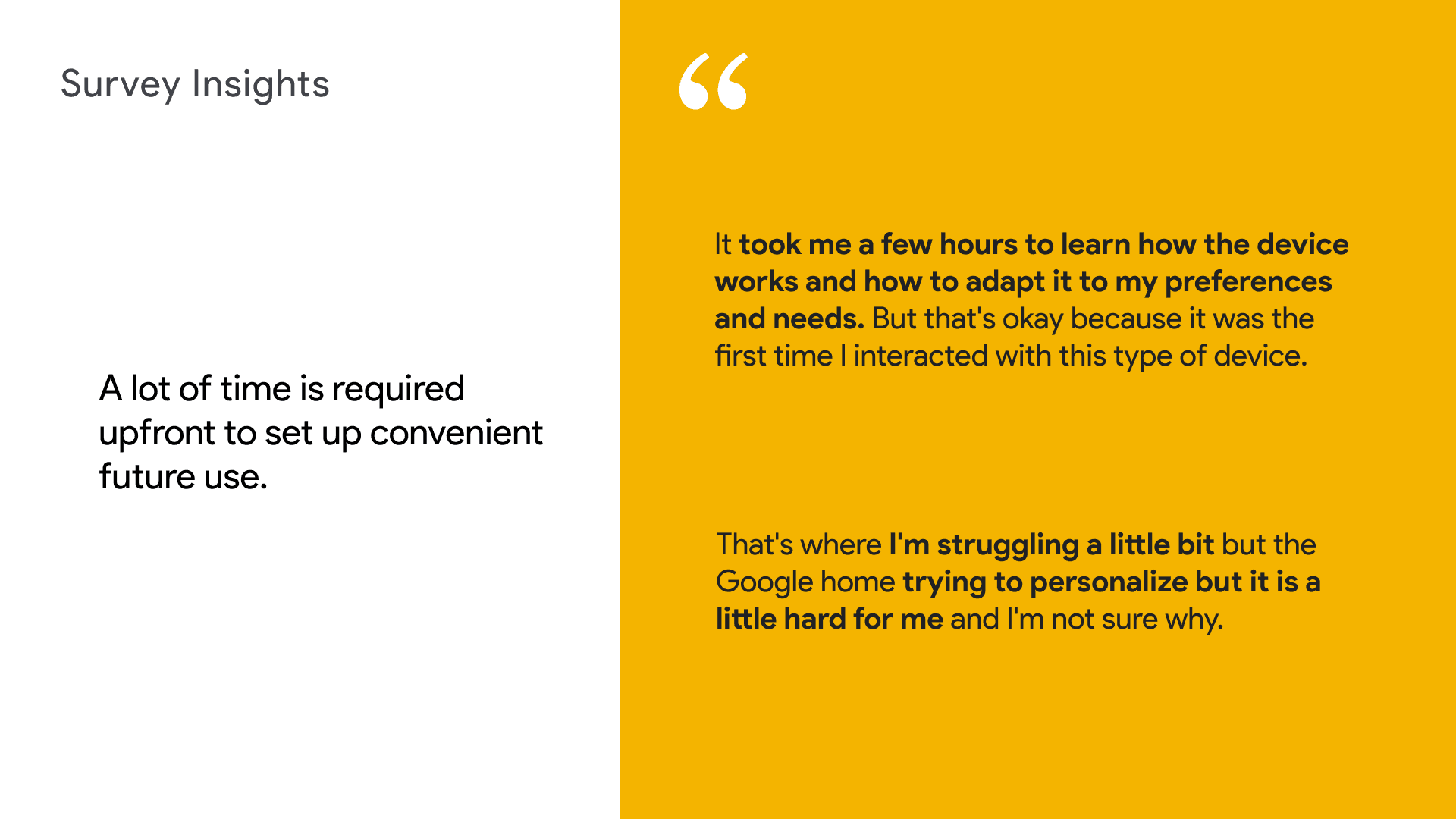

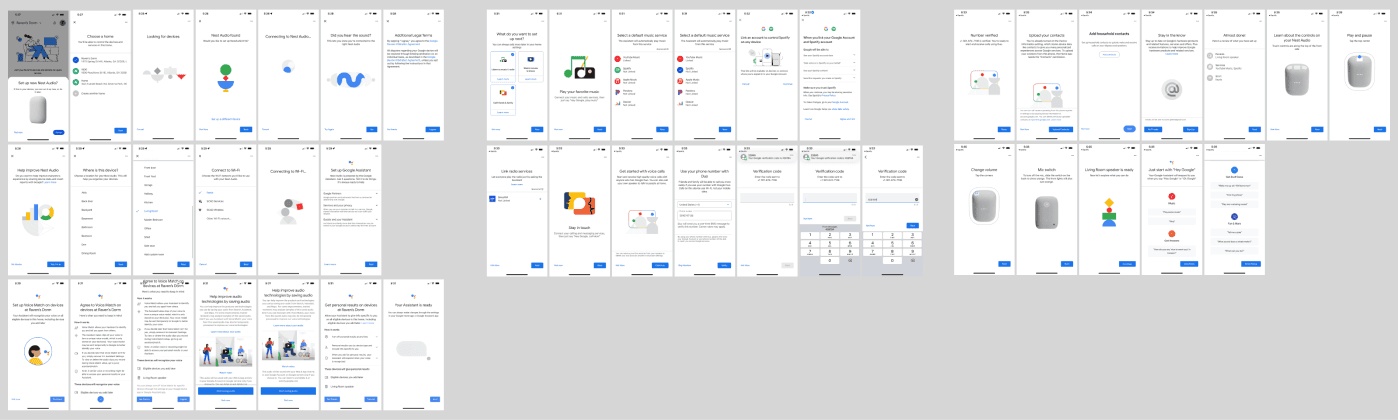

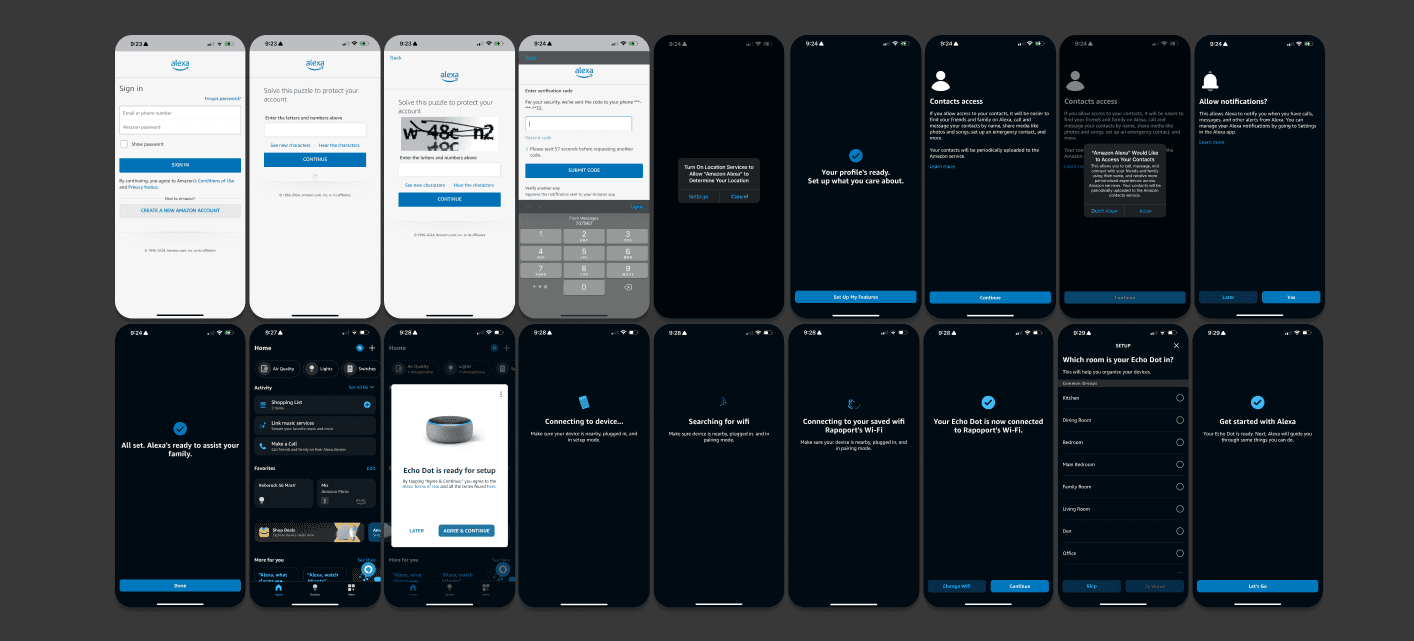

Onboarding is convoluted and lengthy

Users ended up spending more time troubleshooting than actually exploring what should be a new and intriguing product. This often led to frustration, or to users abandoning feature setup altogether.

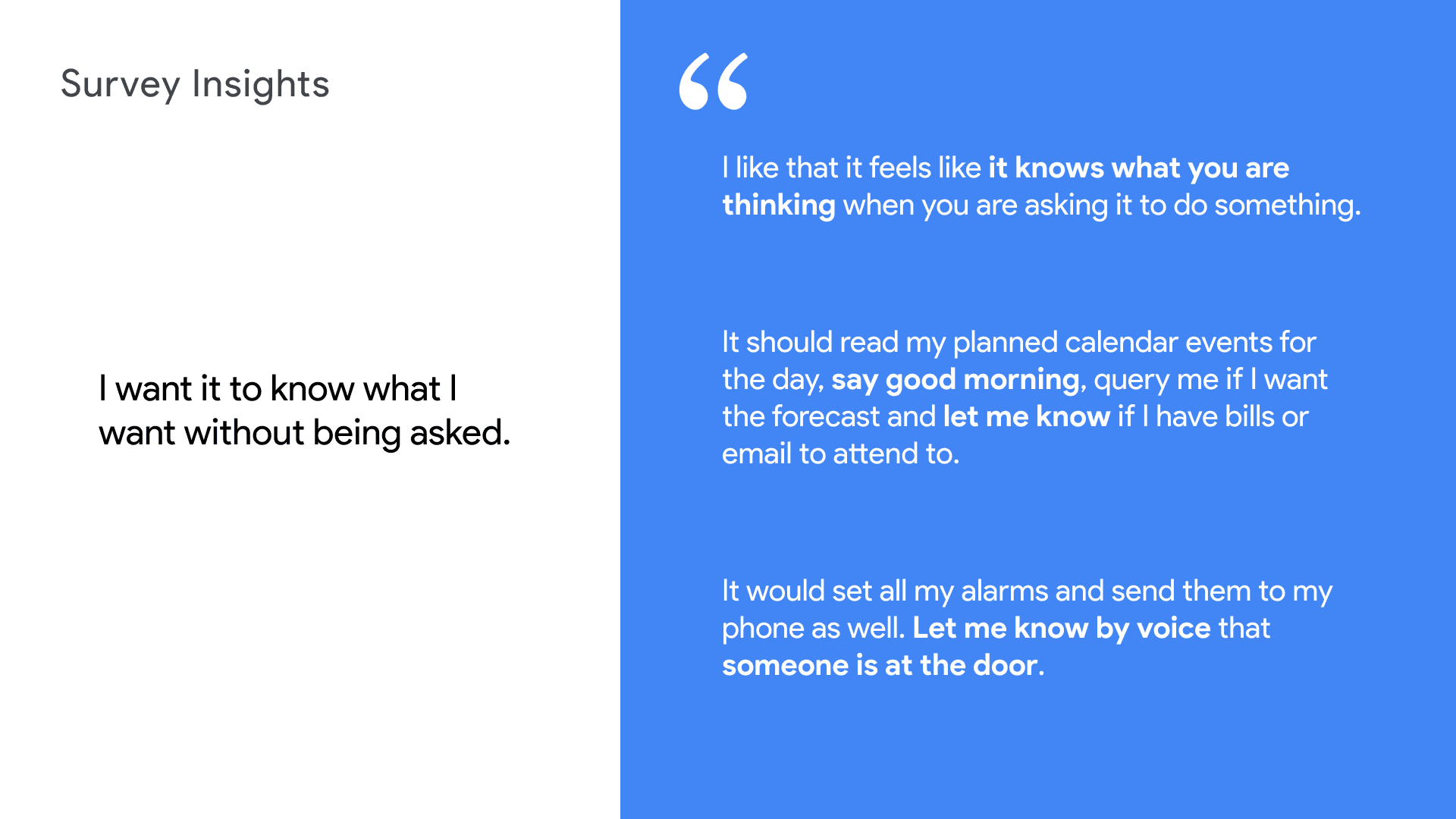

It doesn’t feel personalized, even after extensive setup

Despite users investing significant time into customizing their Google Home by providing their information, they rarely received anything helpful in return. It never seemed to use the data it was given.

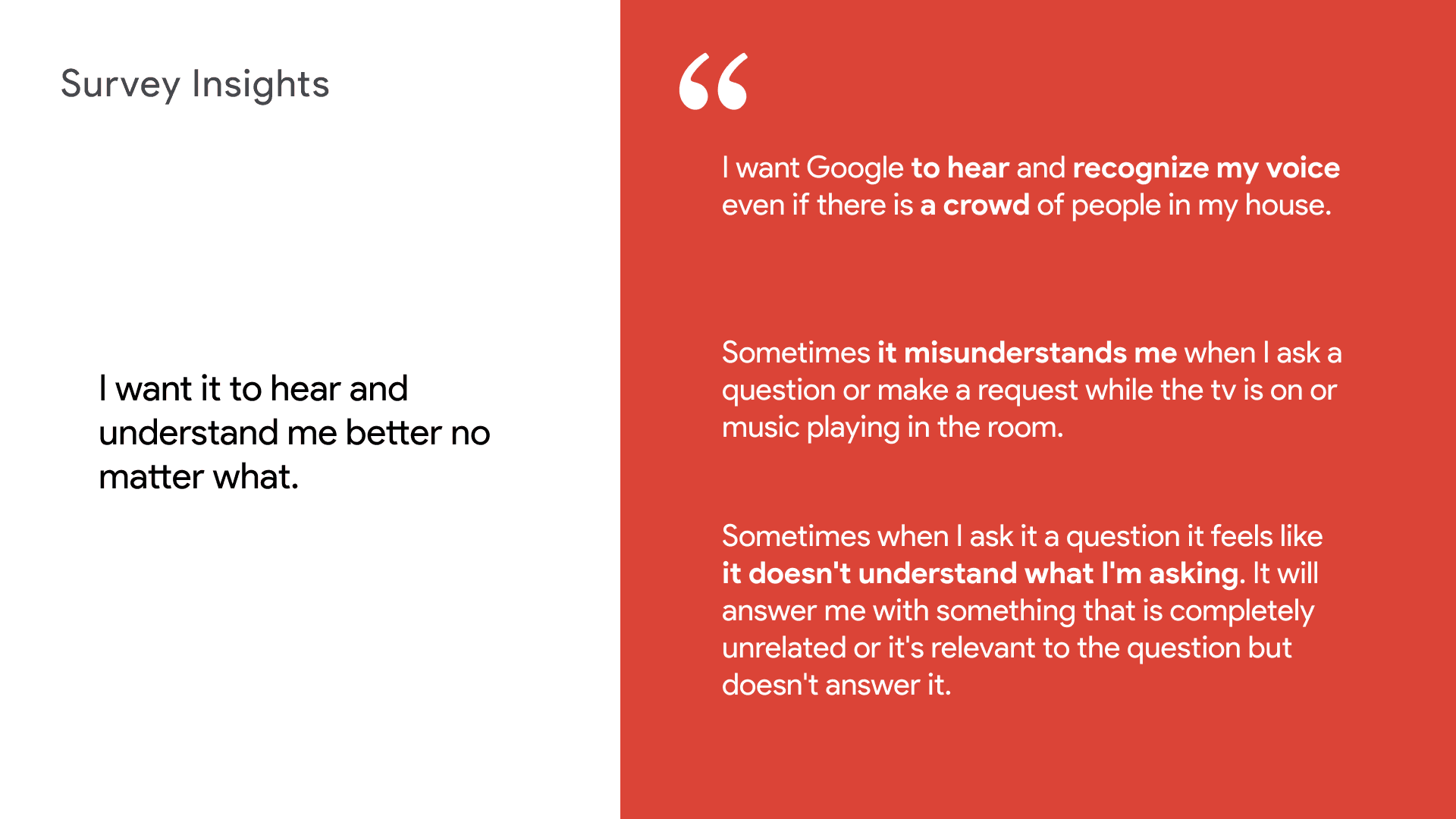

AI Voice Assistance is outdated and unreliable

Interacting with Google Assistant requires specific voice commands and lacks the contextual awareness that users expect today. Users don’t trust it to provide useful help beyond simple tasks they've grown accustomed to asking Google Home for, like setting an alarm, timer, or turning on a light.

As you can see, there are wayyyy too many screens in onboarding.

Onboarding usually requires 40-50 screens (not including adding partner devices or most personalization options), and on average it took almost twice as long as users initially expected.

Although Google Assistant (the AI used in Home speakers) can respond to requests and carry out actions, it doesn't understand natural language the way users now expect from modern competitors (think ChatGPT with GPT-4o).

Solution

After 7 weeks of UX research, I designed a new Google Home prototype. Given our tight timeline, my team didn’t have the bandwidth to tackle design, so I took the initiative to benchmark my proposed improvements against the original.

My prototype made a measurable difference in usability and usefulness across several key areas.

This prototype came together in just 1 week. It’s not perfect, but it’s a solid glimpse into what the future of AI assistants could look like.

I validated my prototype with a SUS score that rose from grade F to A (40.0 to 80.0) and an estimated 80% increase in CSAT.

Interactions that are Actually Enjoyable

By leveraging Gemini, we improved the overall quality of conversations, making Google Home's assistant truly worth asking for help. Previously, users found interacting with Assistant neither fun nor particularly useful; now, it’s a game-changer.

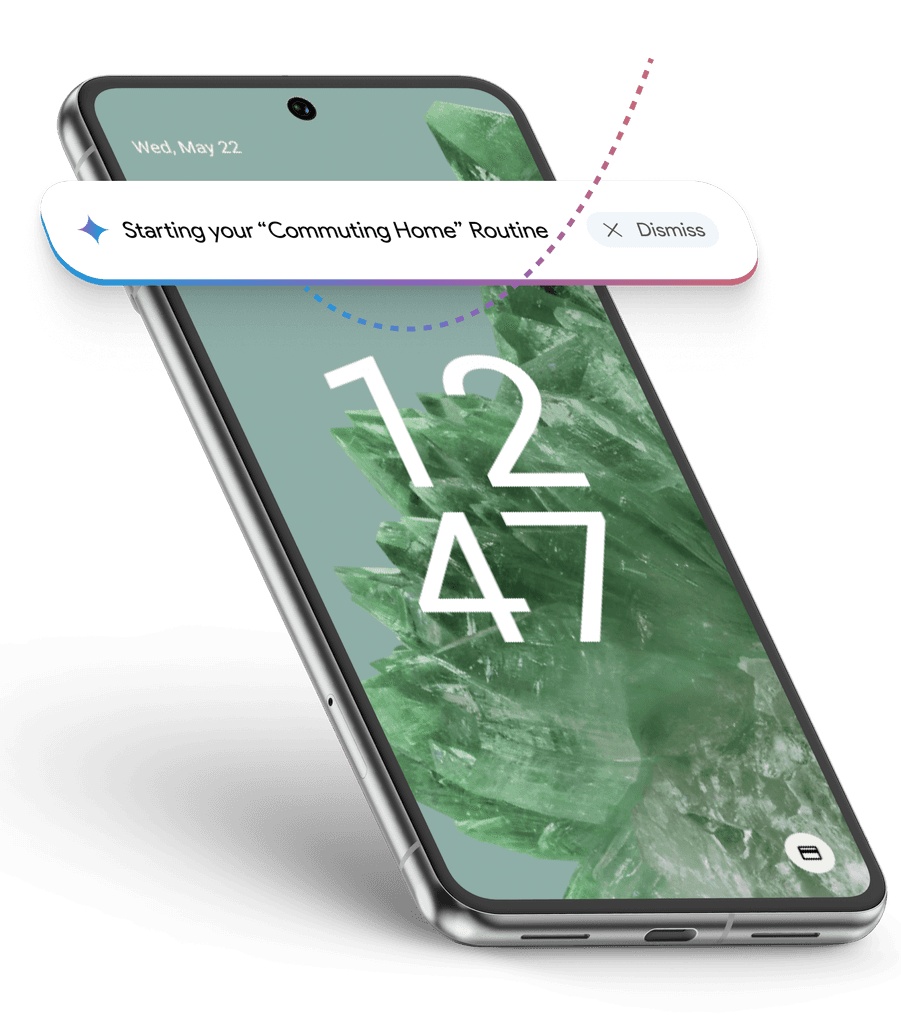

An Assistant that Learns and Anticipates

One pain point we identified was that Google Assistant has always been reactive to commands rather than proactive. Users expected that after living with their device for years, it would progressively get smarter, but it doesn’t. To address this, we made Google Home smarter by having it recall collected information and proactively make suggestions based on user habits and lifestyles.

Priming the User for Voice Interaction

(Before vs After)

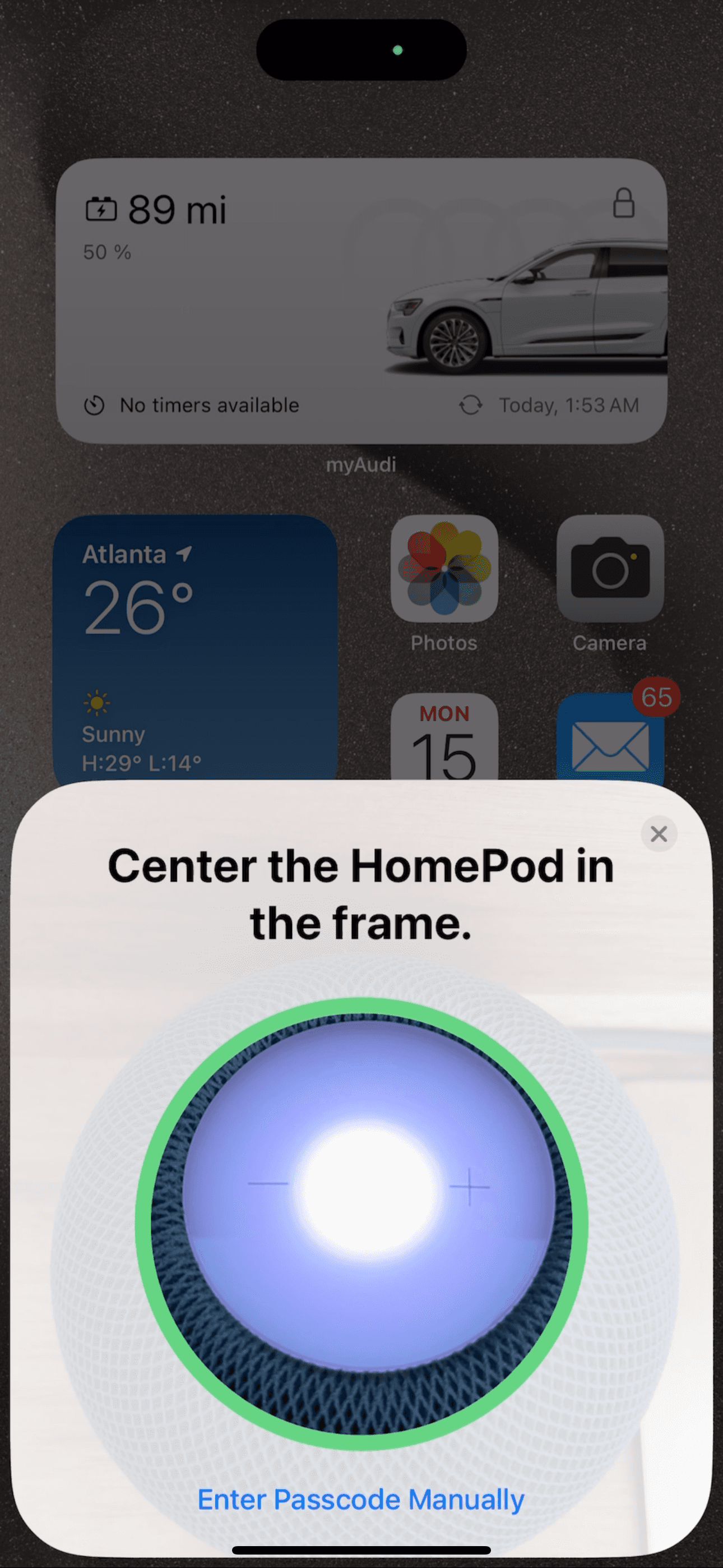

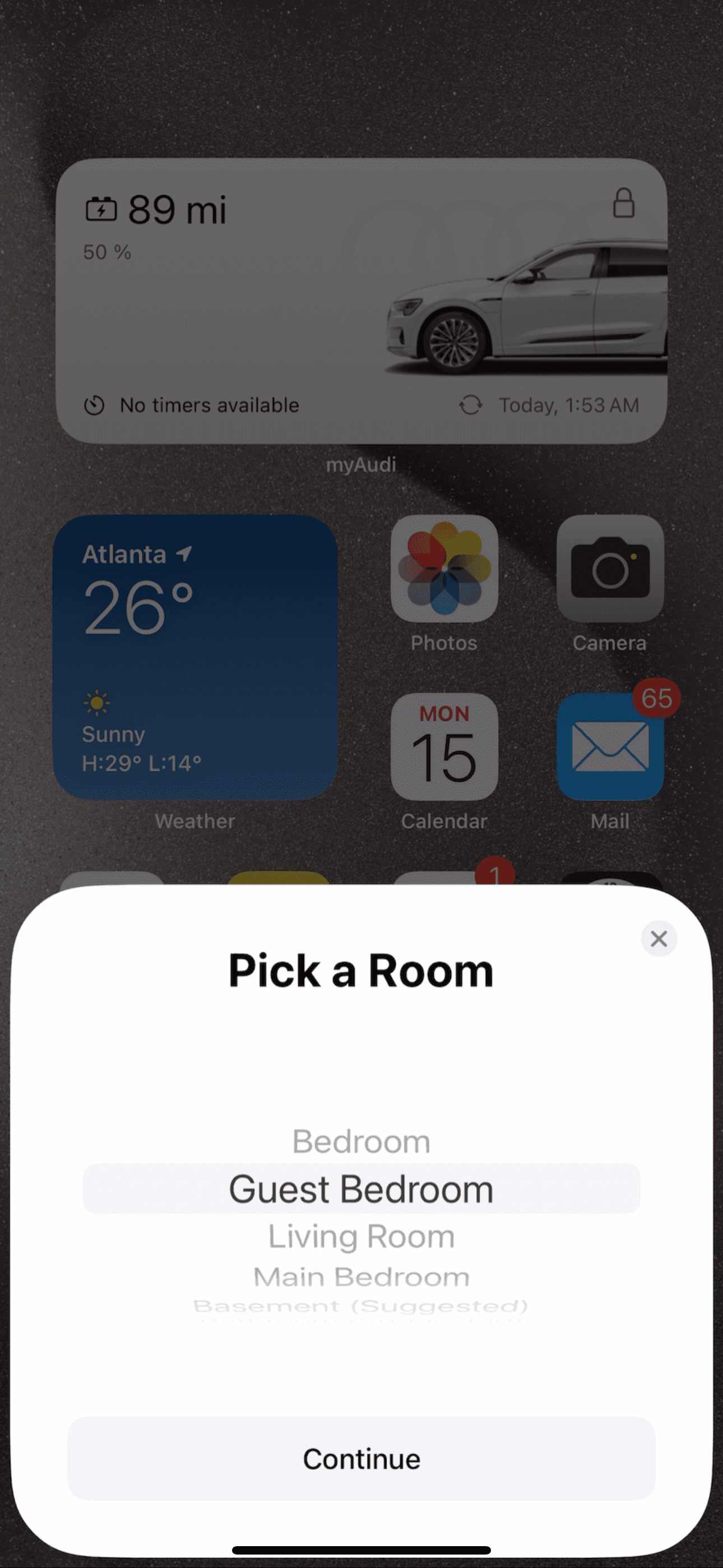

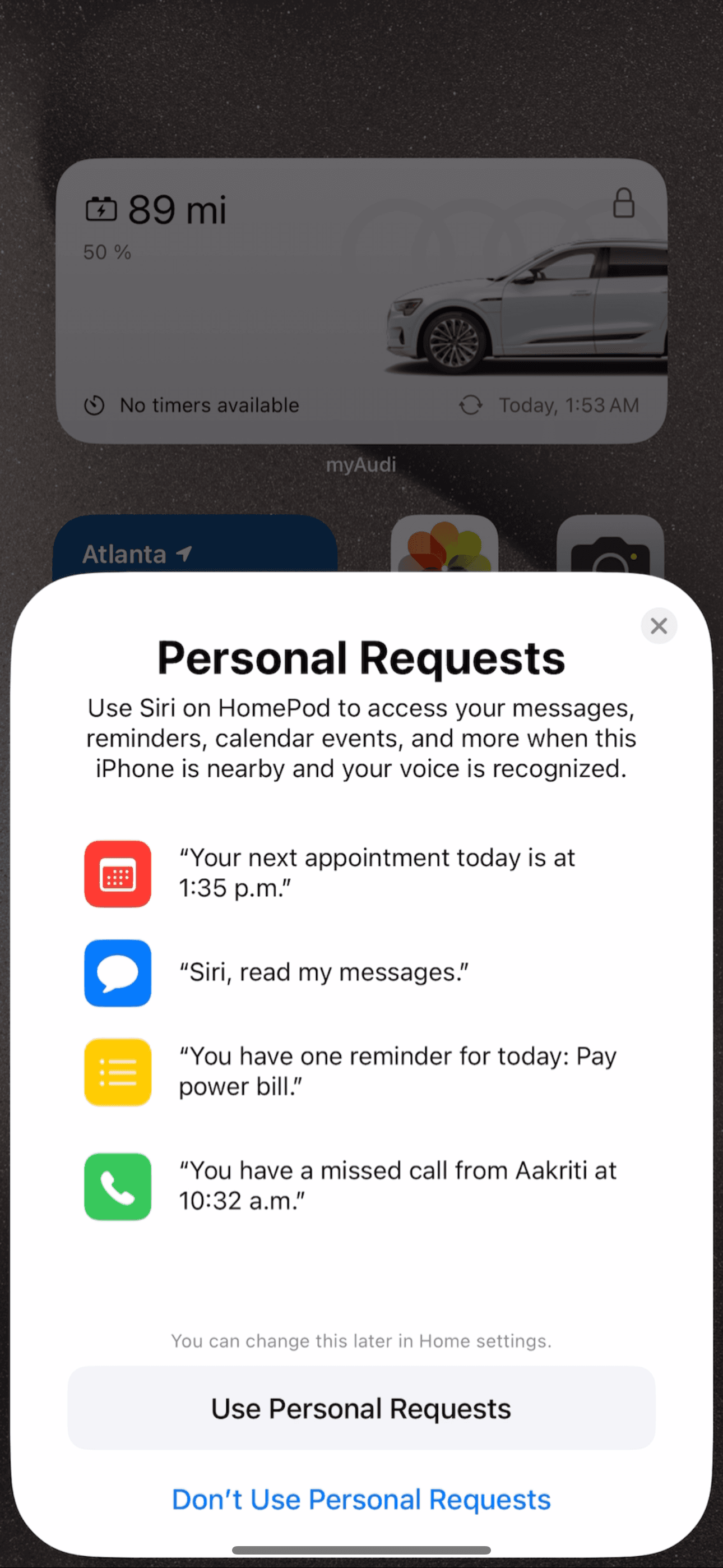

I redesigned Google Home’s onboarding prompts to prioritize getting users comfortable with talking to the speaker, reserving mobile phone interactions for more complicated tasks like sharing Wi-Fi credentials or accepting terms and conditions.

An Assistant that's Contextually Aware

The bottom line is that humans don't talk like robots. To be helpful, an intelligent assistant should be able to put two and two together. For example, it should understand that an "office" is a place to work.

But it's not just about Google Home. I explored how Gemini should empower users in various contexts.

Let me show you a snapshot of this new world with Gemini.

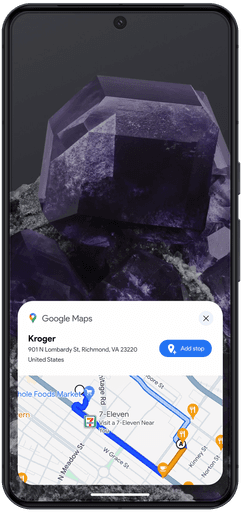

How good is an assistant if it quits on you the moment you step out the door?

"Smart Home" turned

Smart Living

An assistant that continues to be helpful even when you're out of the house.

Hey Gemini,

What should I cook tonight?

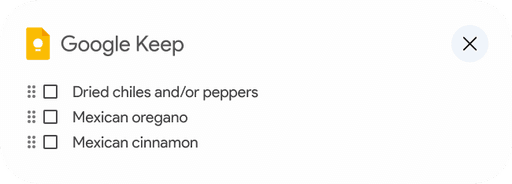

Hey Gem, I need to make a stop for groceries on the way home today.

Can you also make a list of the ingredients I still need?

My Design Principle

Change User Perceptions With Human Touch

Fixing the existing usability issues with the Google Home app UI and speaker VUI would have been a step in the right direction. But as our user research showed, most of Google Home's value was still flying under the radar. Usable doesn’t always mean useful. Enter Google Gemini: the perfect chance to rebrand and leapfrog both direct competitors (looking at you, Amazon Alexa) and indirect ones (Siri, ChatGPT, etc.).

The game-changer? Creating a proactive, learning assistant. Think of it as moving closer to having a true human assistant that gets you and anticipates your needs. It’s not just smart; it’s intuitive, and that’s the future we’re aiming for.

Users were frustrated by the underutilization of their data. Google Gemini was a strategic opportunity to rebrand Google Home into a proactive, learning assistant that truly understands and anticipates user needs, transforming it into a leader in the smart assistant space.

Discovery

During our discovery phase, we aimed to get a broad view of the smart speaker competitive landscape. Each team member conducted individual diary studies on multiple devices to capture our initial impressions and observations while setting up and living with the devices for a few days.

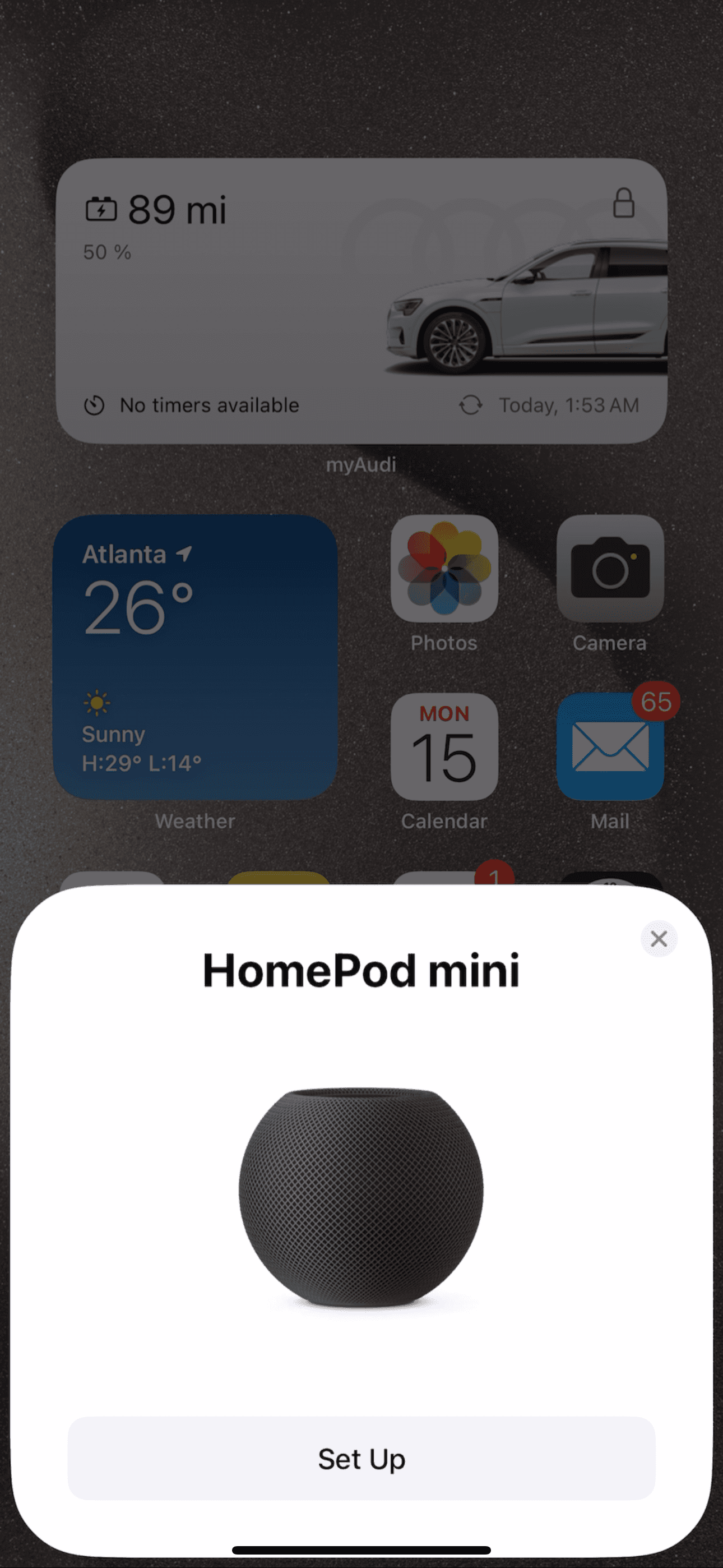

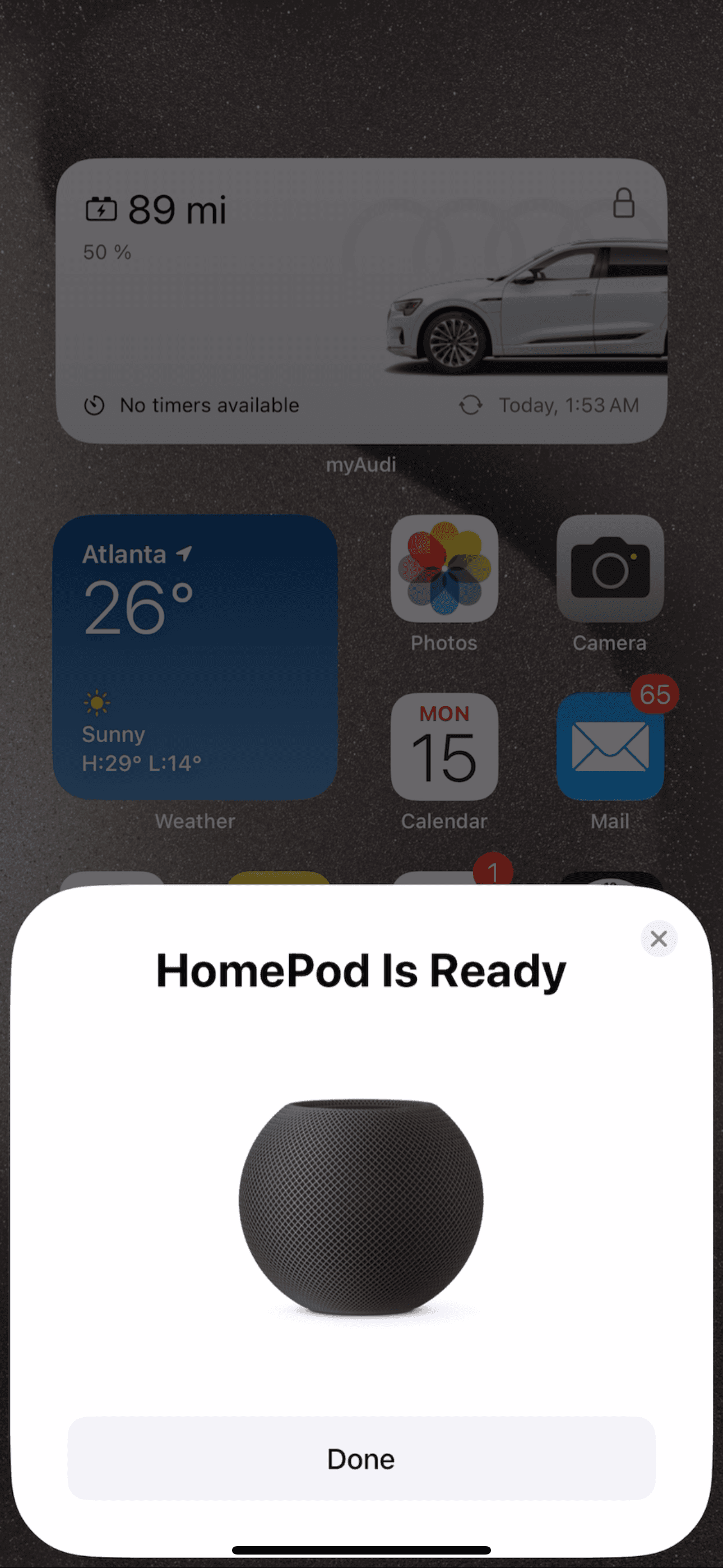

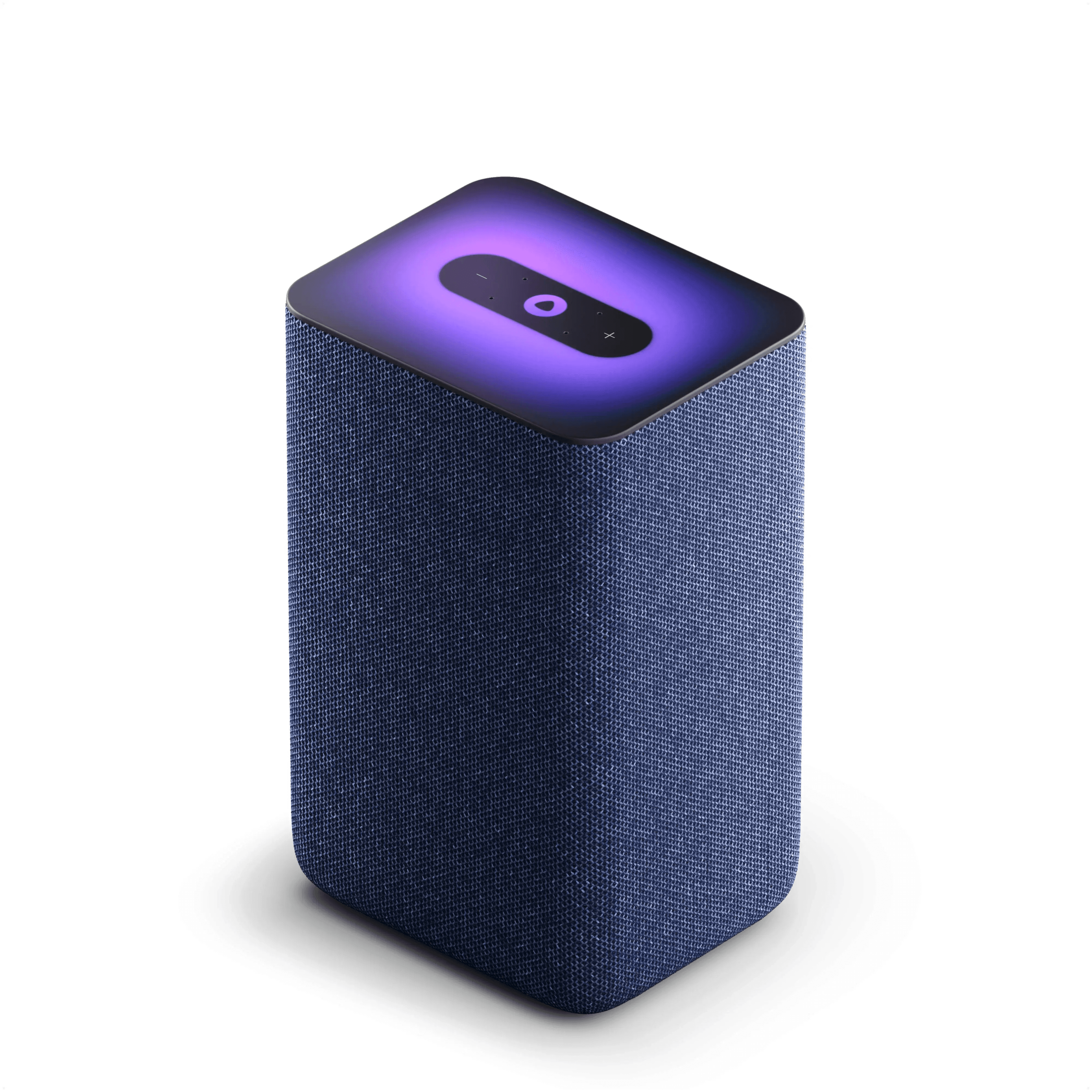

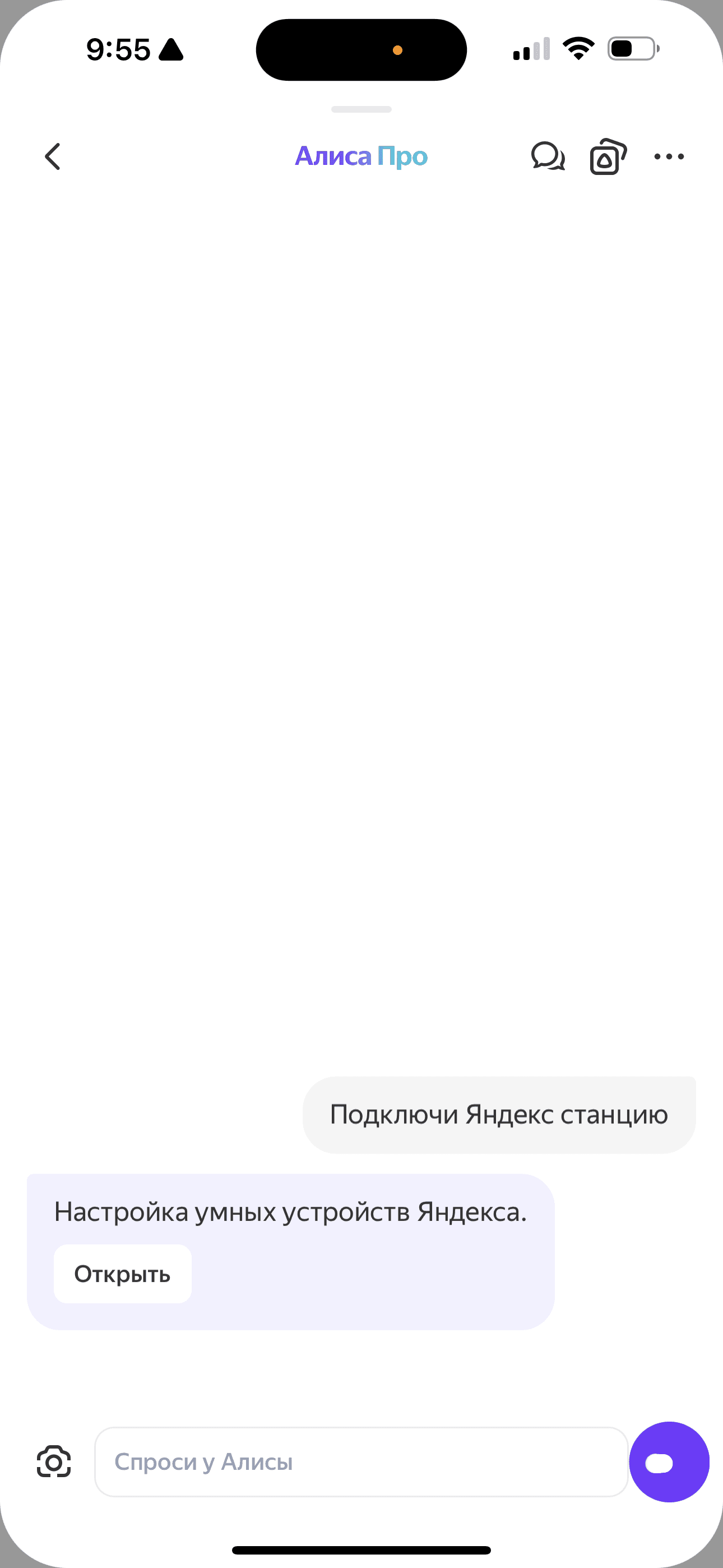

Our process began with a high-level overview of various competitors, highlighting Apple HomePod (with Siri) and Yandex Station (with Alice) as having the best onboarding experiences.

Amazon Alexa takes a different approach to personalization than Google Home: it doesn't front-load any of it into onboarding.

Apple HomePod had one of the most seamless onboarding experiences, taking under 60 seconds (except when it DIDN'T work, in which case I had to wait 25 minutes for it to try to sync my data onto the speaker).

(Illustration: saying "Alice, connect to Yandex Station" automatically executes the request, as shown in the conversation log.)

In our secondary research, we explored international markets as well. We acquired a Yandex Station from Russia, which outperformed all other competitors. Once you asked, it handled the entire setup process for you. Alice, its AI assistant, was noticeably more conversational than any of the other competitors.

Recruiting Participants

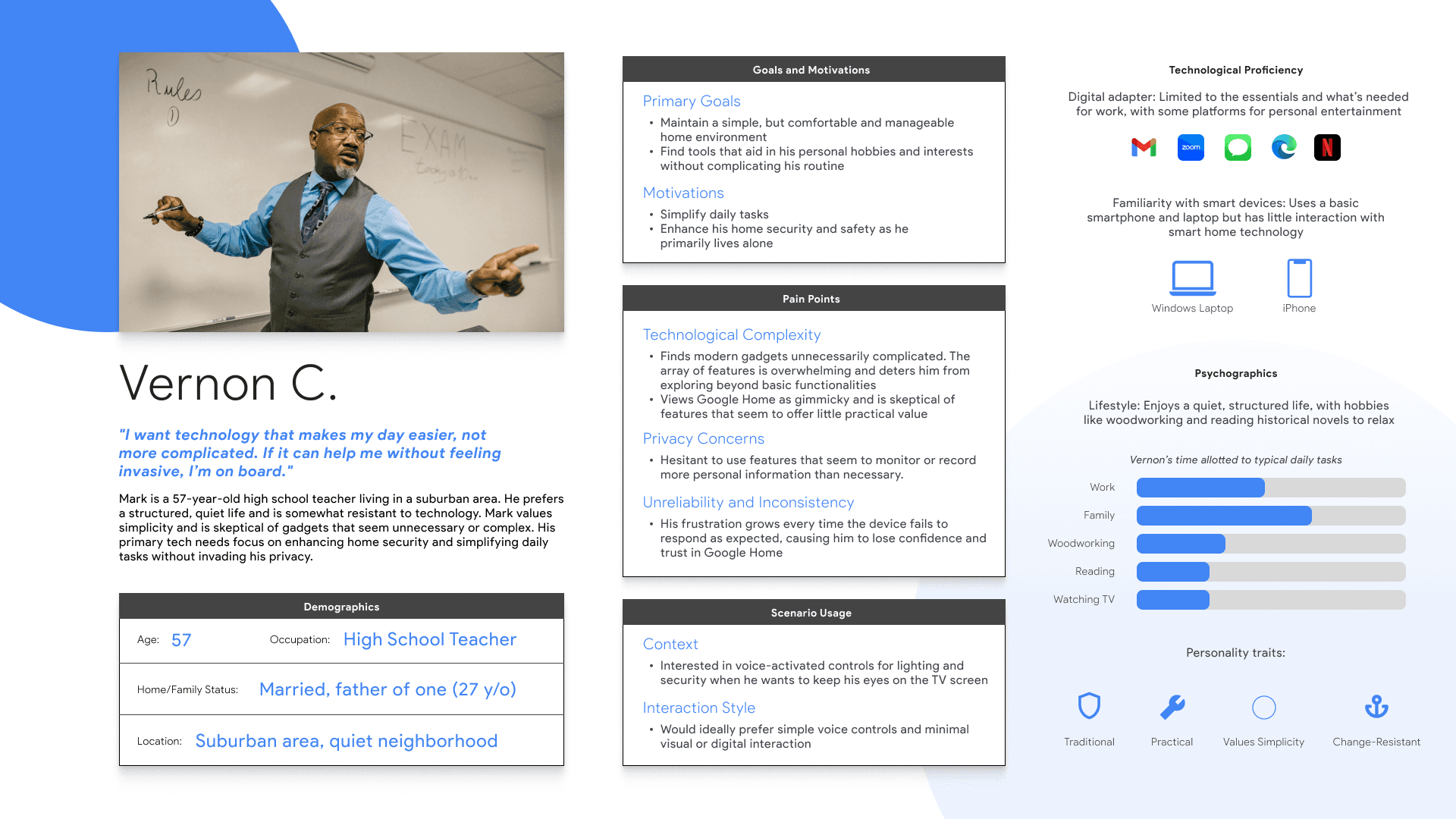

One of our first challenges was figuring out who Google’s designers had in mind for Google Home. But then it hit me: we didn’t need to know how it worked for their ideal user. What we really needed to know was where it fell short, especially for folks who aren’t tech-savvy, or even tech-agnostic. By focusing on the pain points for these users, we could uncover hidden usability issues and make improvements that benefit everyone, no matter their tech expertise or behavior.

I hypothesized that if we zeroed in on the tech-agnostic and less tech-savvy users and tackled their problems, we could solve issues for everyone else, including Google's ideal customers.

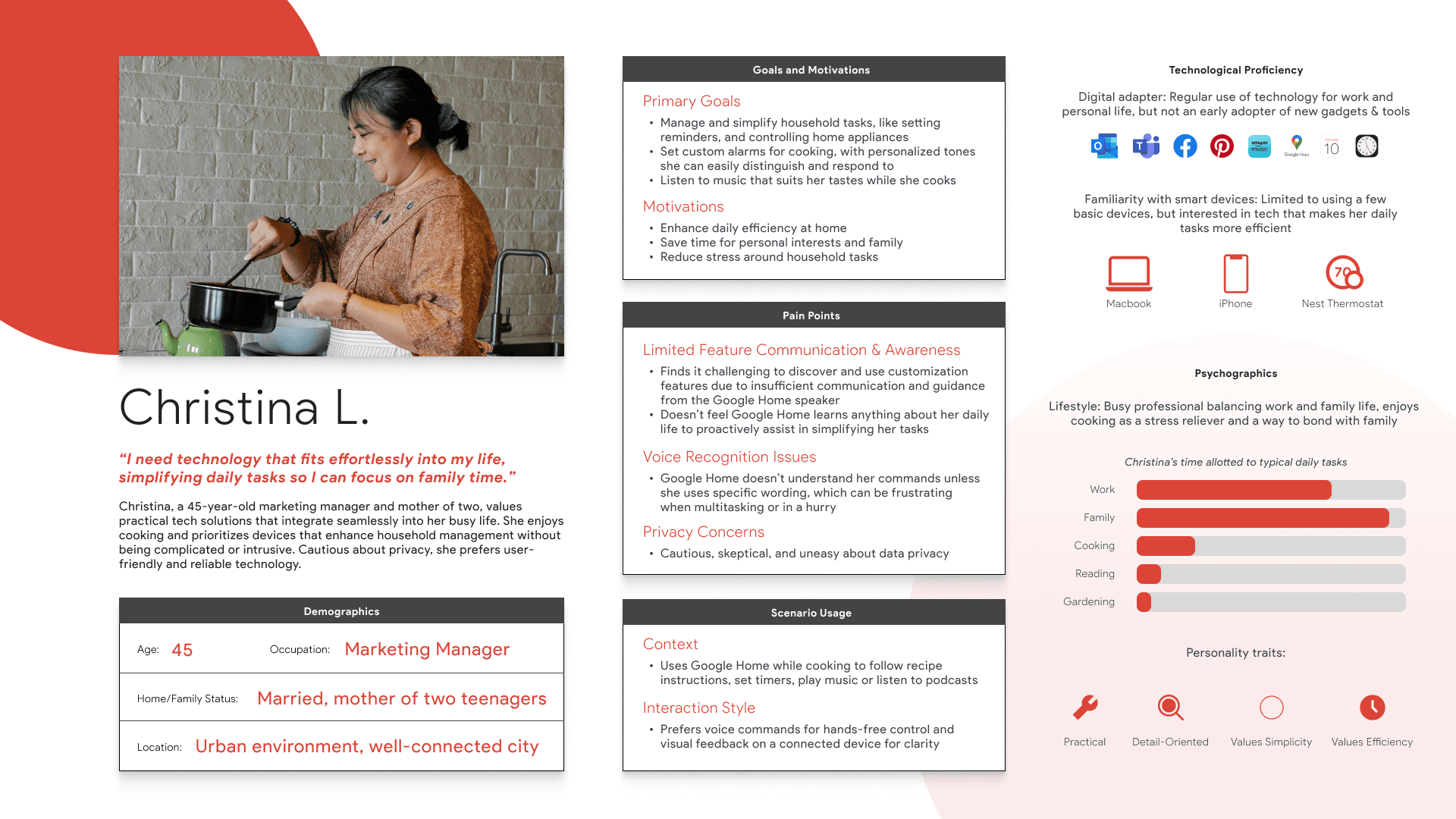

Personas for Screeners

The team was initially conflicted about which participants to recruit. I aligned everyone by developing personas that defined our ideal testing participants. This approach also helped me communicate our recruitment strategy to Google, whom I treated as a key stakeholder.

(Adapted for presentation purposes; originally just sketched in FigJam and discussed.)

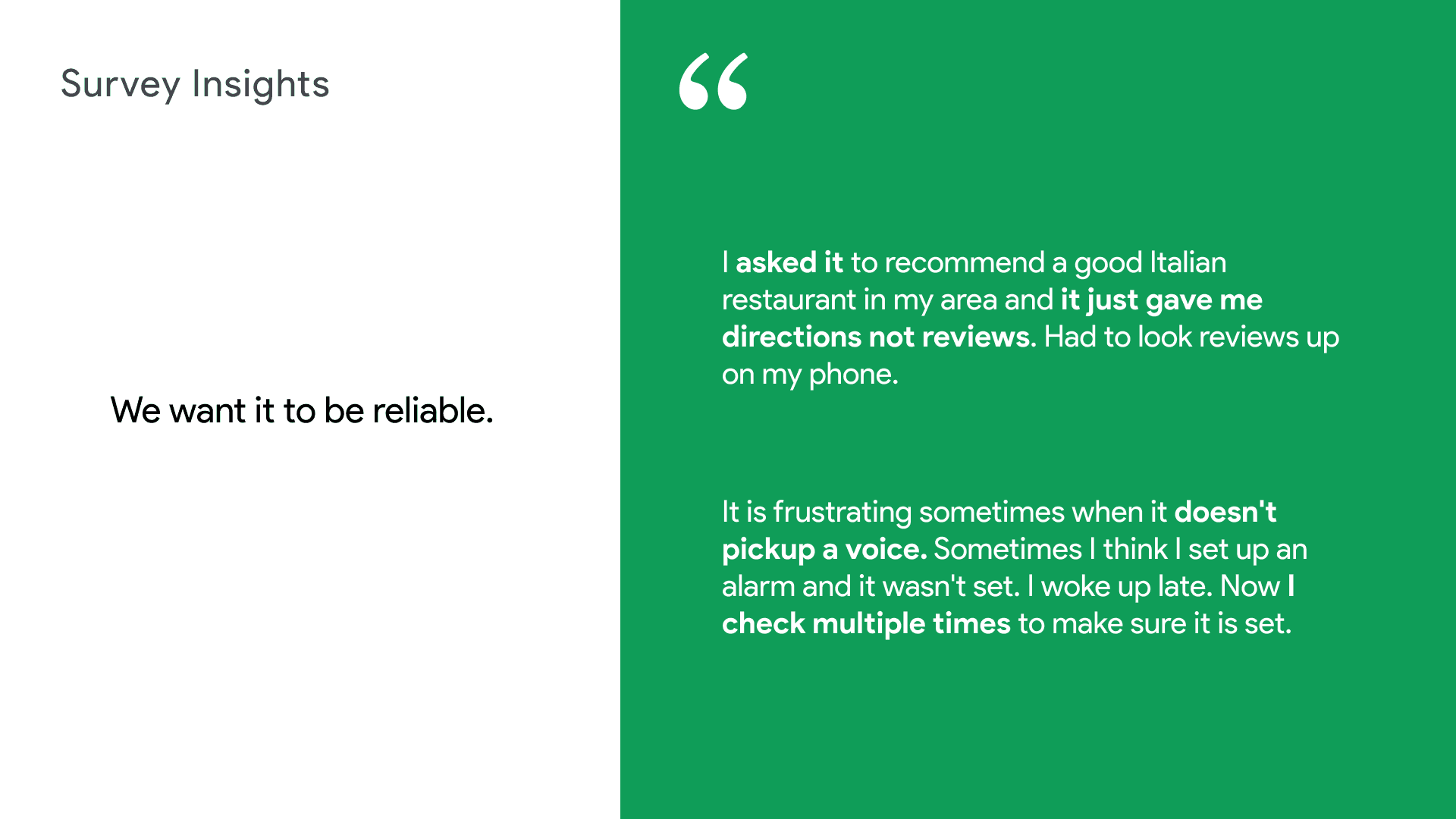

Surveying

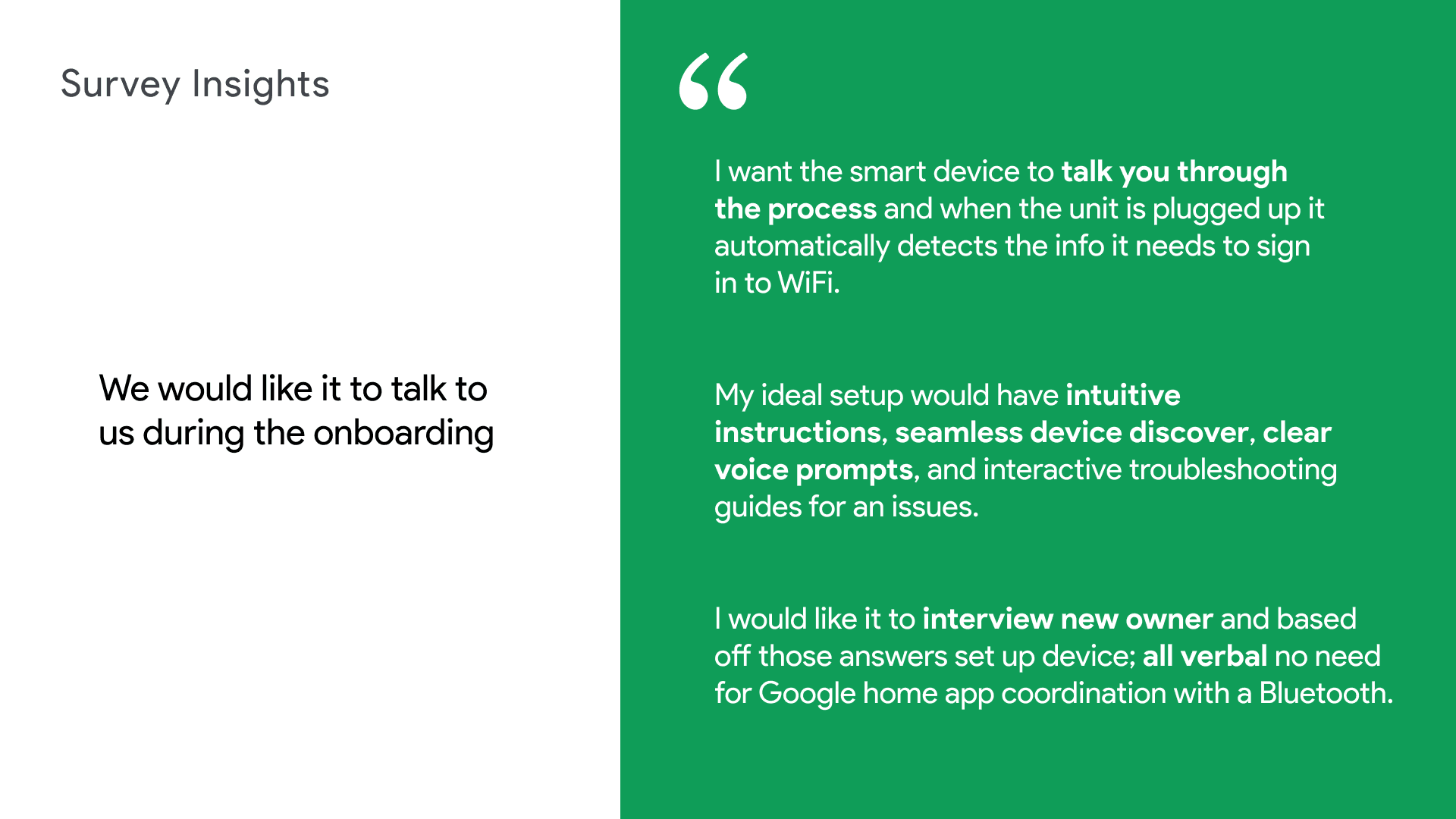

To figure out our usability testing plans, we first needed to nail down what to benchmark and test for.

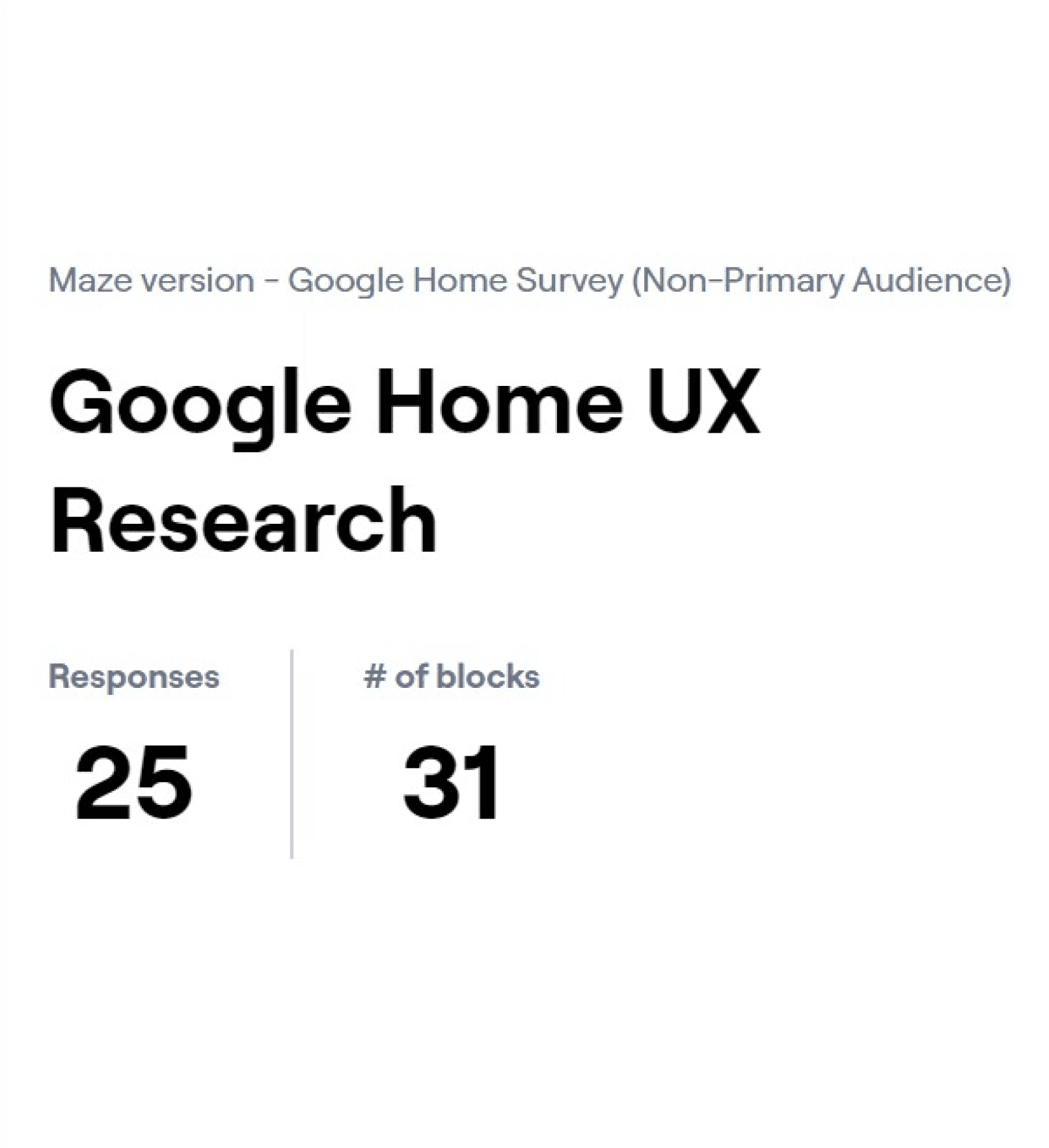

With the tight deadline, I knew we had to leverage unmoderated surveys as much as possible. We used Maze to survey 25 people who passed our screener.

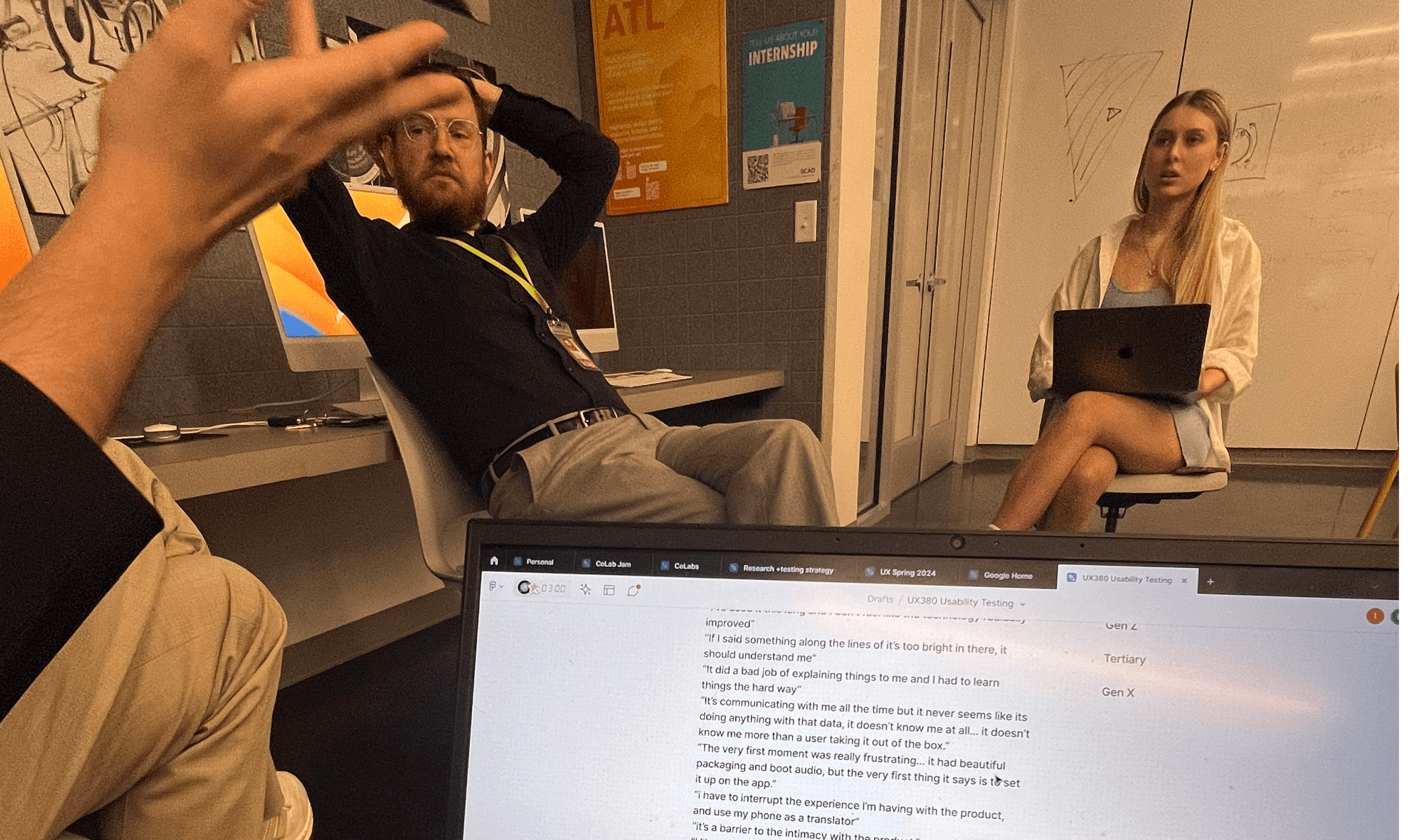

Interviews

While we sent out surveys, we also conducted informal interviews to capture more nuanced experiences with Google Home. These open-dialogue sessions, though not formally structured, allowed us to learn more about users' day-to-day and long-term interactions with the device.

We conducted interviews whenever we could to help further inform our usability testing plans.

Experiment Design

Our usability testing strategy focused mainly on qualitative observations, with analysis objectives based on the 3 problem categories we defined earlier (onboarding, personalization, and AI assistance). Although we didn’t have the resources to collect large samples, I still thought quantitative data was worth collecting, since it still provides valid data points.

I defined our key quantitative metrics so they could support our findings later on.

Key Metrics

Usability (SUS)

How usable is the Google Home app and Google Home speaker?

Satisfaction (CSAT, Before vs After)

Are users satisfied with their experience setting up, using, and interacting with Google Home?

Time on Task (Expectations vs. Actual)

How much faster or slower is it to set up Google Home than users initially expect?

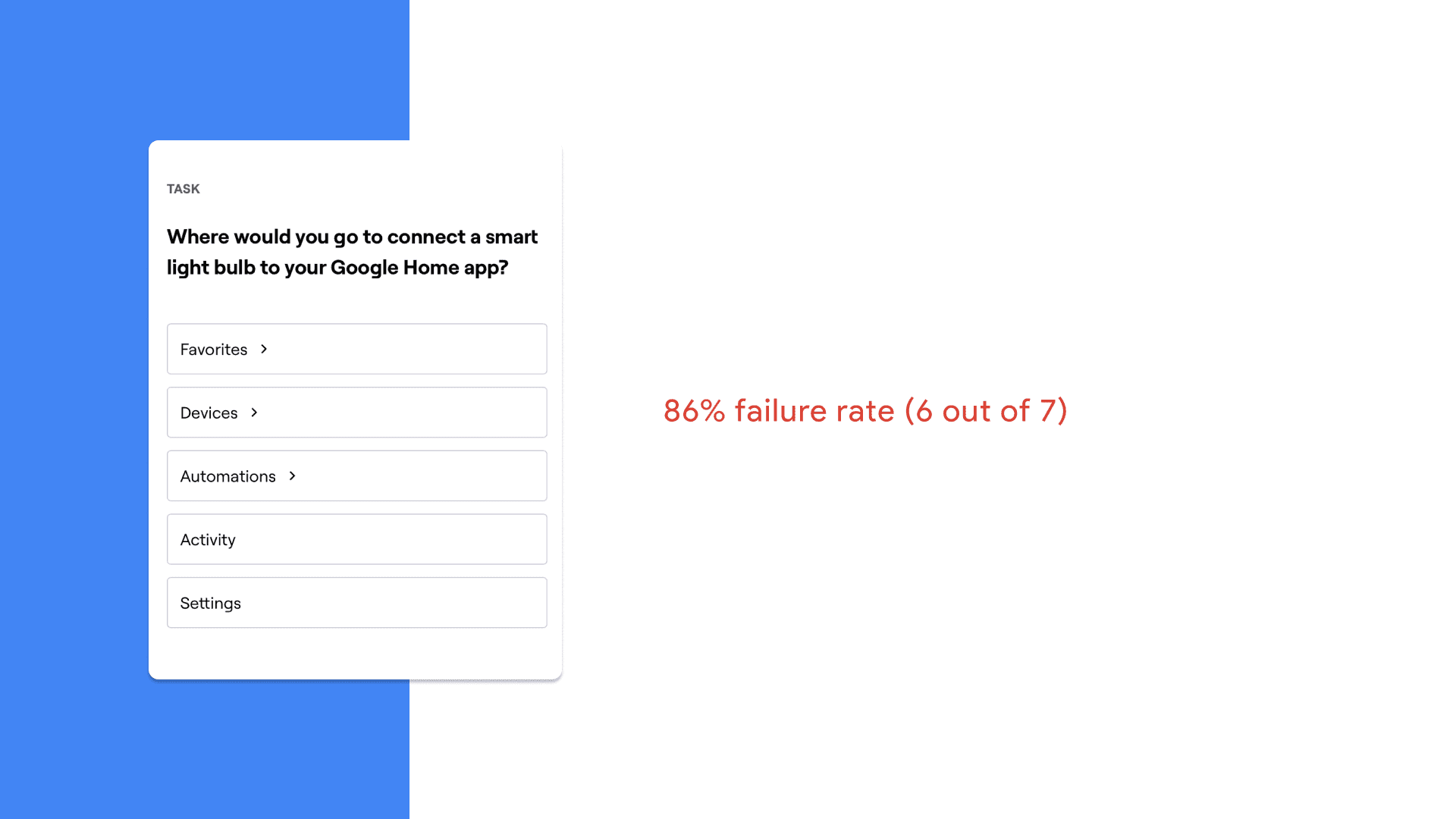

Error + Failure Rates

What tasks do users have trouble accomplishing?

Net Promoter Score (NPS)

How does this experience affect their perception of Google’s brand?
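For reference, a SUS score like our 40.0 and 80.0 comes from a fixed scoring rule: odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the 0-40 total is multiplied by 2.5. Below is a minimal Python sketch of that standard rule, assuming the usual 10-item questionnaire answered on a 1-5 scale; the function names are illustrative and the sample answers are made up, not our participants' data.

```python
def sus_score(responses: list[int]) -> float:
    """Score one participant's 10 SUS answers (each 1-5) on the 0-100 scale."""
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("Responses must be on the 1-5 scale")
    raw = 0
    for item, r in enumerate(responses, start=1):
        # Odd items are positively worded (r - 1); even items are negatively worded (5 - r)
        raw += (r - 1) if item % 2 == 1 else (5 - r)
    return raw * 2.5  # raw total is 0-40; scale it to 0-100


def mean_sus(participants: list[list[int]]) -> float:
    """Average SUS across participants (the number typically reported for a product)."""
    return sum(sus_score(p) for p in participants) / len(participants)


# Hypothetical answers, not our participants' data
print(sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2]))  # 75.0
```

A product's reported SUS is the mean of the per-participant scores, which is what `mean_sus` returns.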

Pilot Testing

I learned that, much like design, UX research is highly iterative. I conducted multiple pilots to ensure that our actual tests would run smoothly.

These pilot tests helped us identify rabbit holes to avoid, such as troubleshooting language-setting issues with voice commands, scenarios that would take too much time, and anything that wouldn't lead to new insights.

Ensuring Our Tests Counted

We didn't have the luxury of time or enough screened target participants to run the tests we wanted. So beyond our coursework, I leveraged my network to learn, test, and practice as much as possible to ensure our tests would yield the most useful data we could collect.

As college students with limited resources, we were forced to get creative.

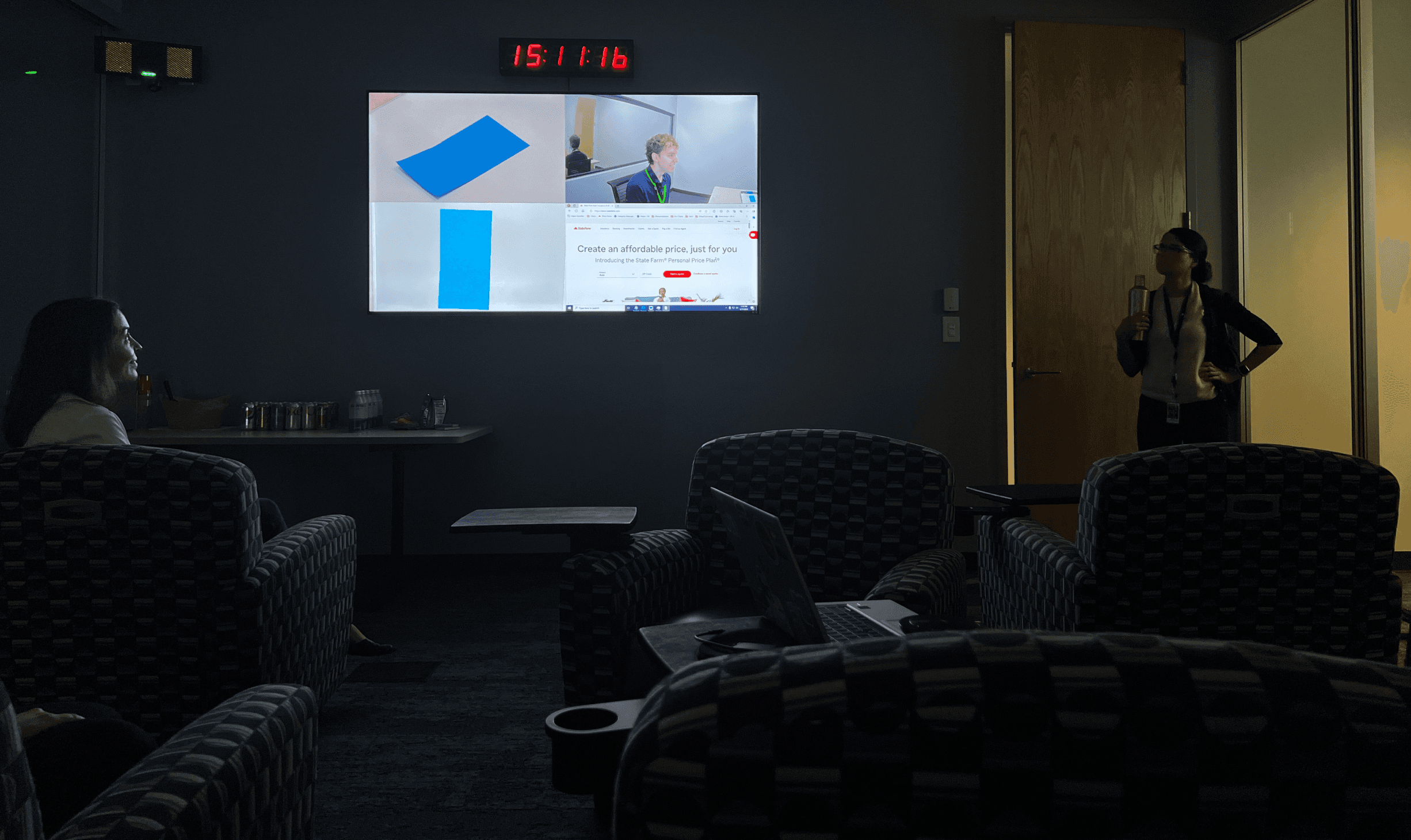

State Farm Usability Testing Lab Visit

To learn more about usability testing practices, I had the opportunity to visit State Farm’s Atlanta office where I met with their dedicated research team and shadowed a mock test.

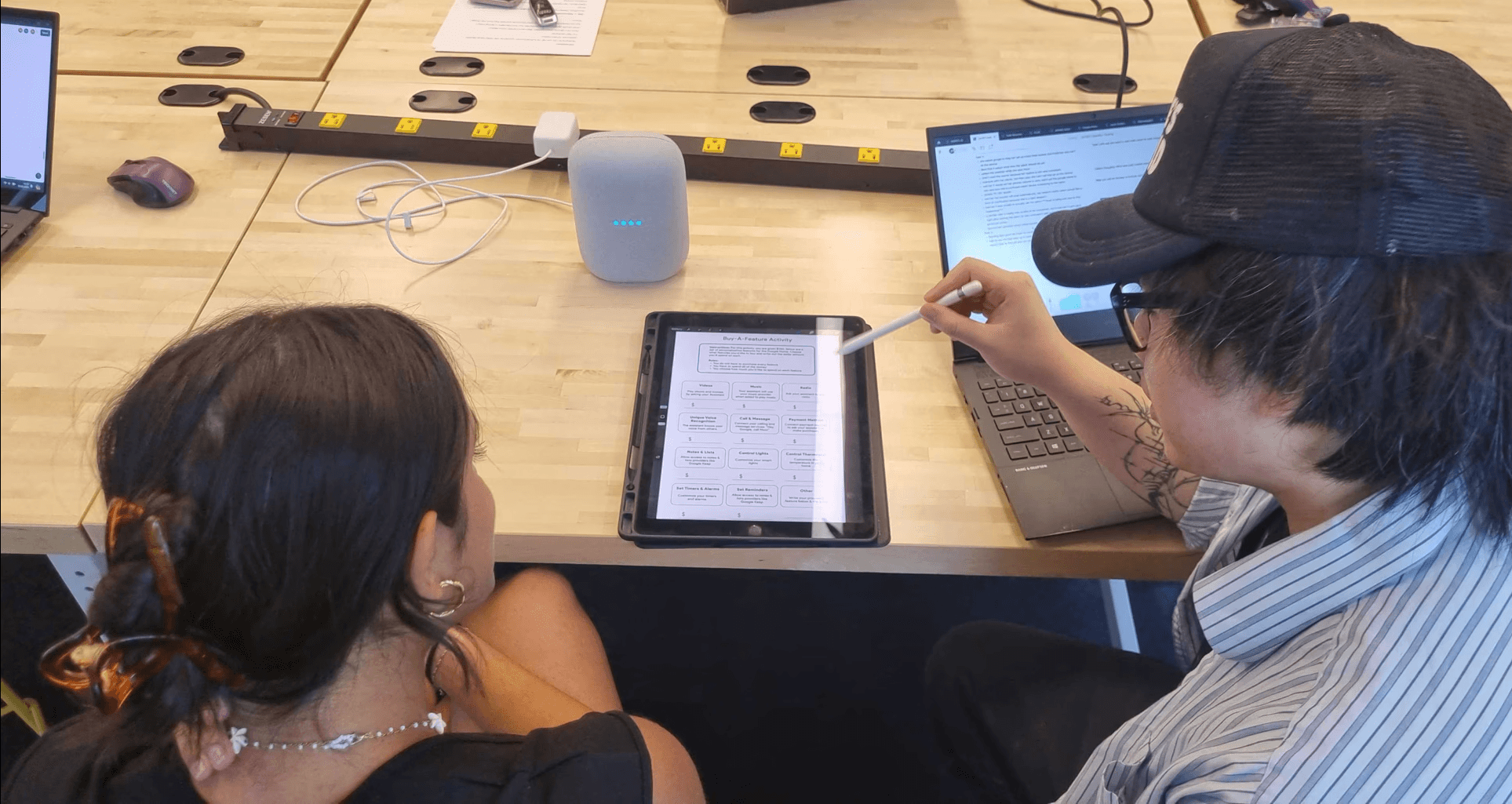

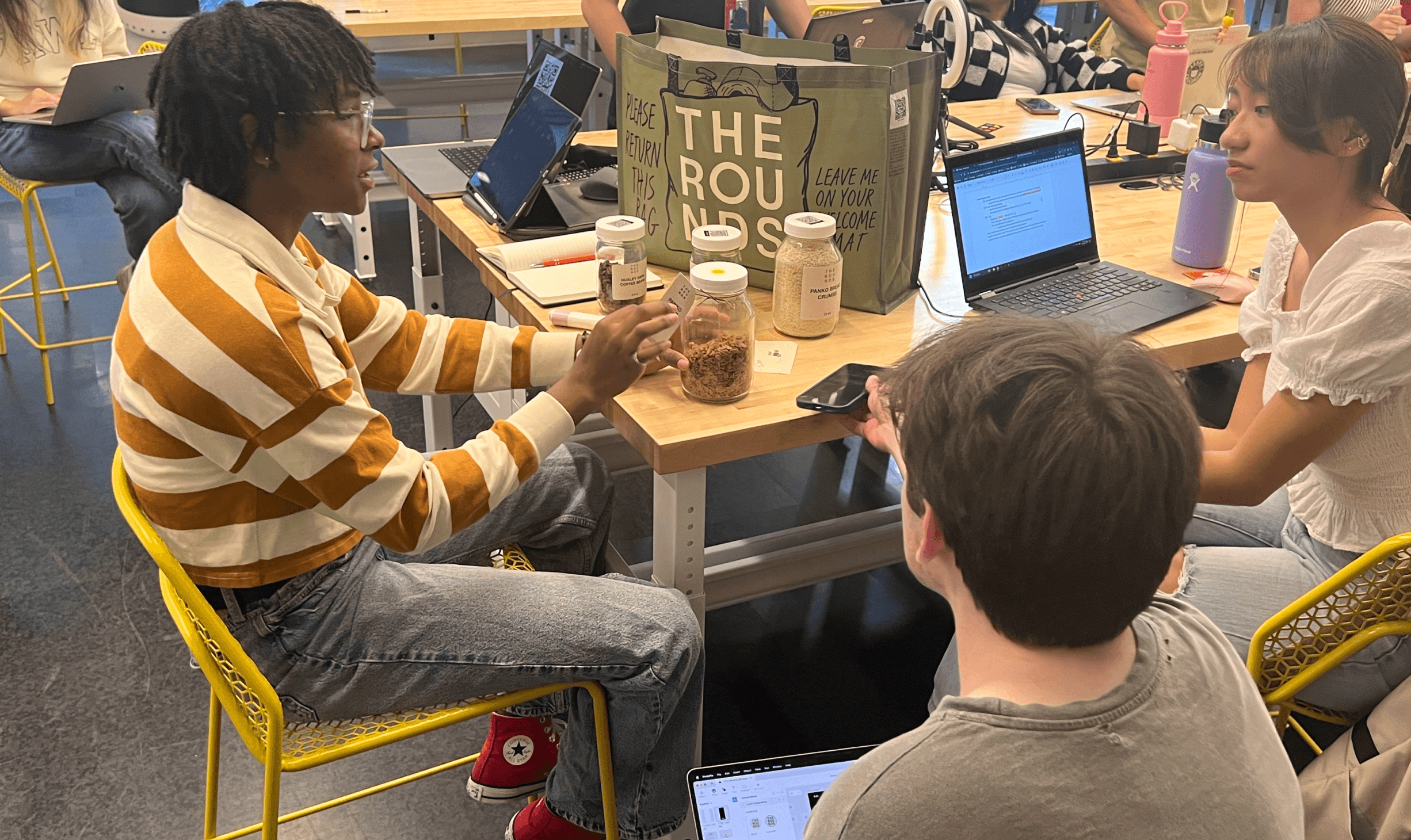

SCAD FLUX ATL User Test Fest

As the student development lead for SCAD’s UX Club, I hosted our annual usability testing fest, where 30+ students gathered more data points for their projects. I also used it to conduct additional tests, surveys, and interviews.

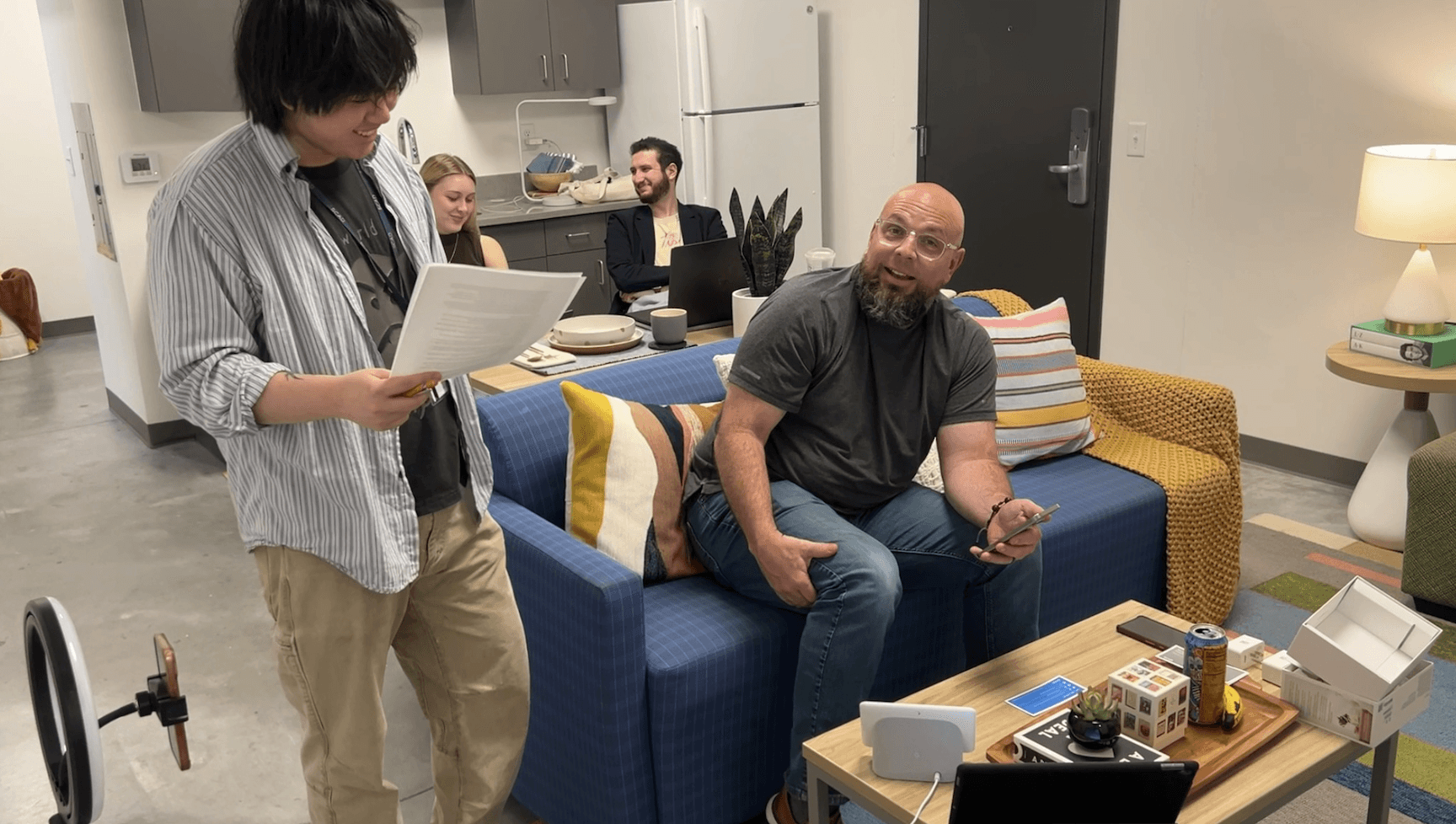

Staging

We initially planned to stage our usability test in our school’s usability testing lab.

I suggested that we instead just use the staged dormitory rooms to mimic a real home or apartment, in an attempt to immerse participants in realistic scenarios.

Work smarter not harder.

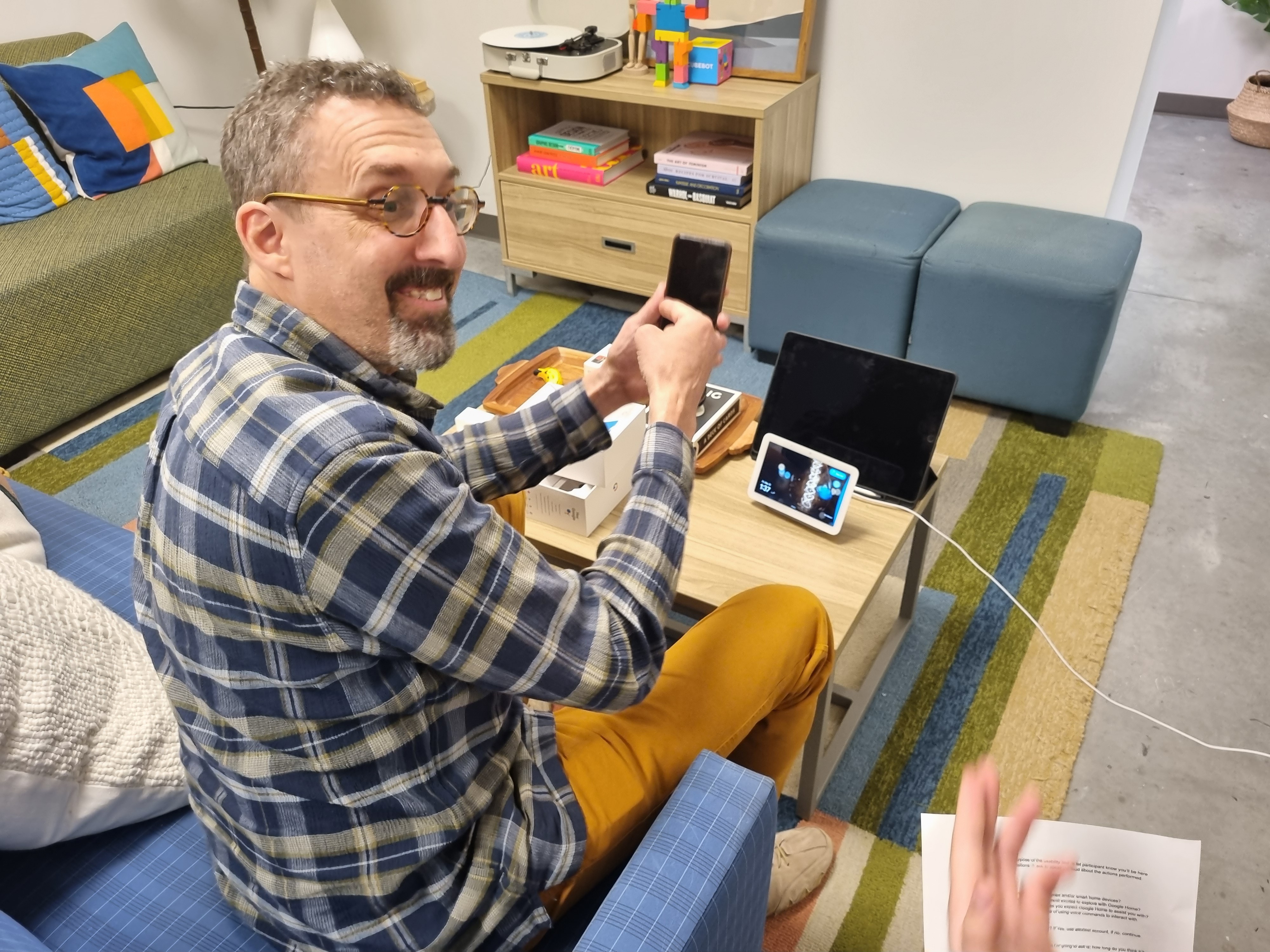

Usability Test Findings

Google Home is quite... bad...

Finally, we were ready to run our usability test: 2.5-hour in-depth sessions with 3 participants, structured around the 3 main analysis objectives we defined earlier (onboarding, personalization, and AI assistance).

With our target participants, Google Home performed much worse than expected in all categories.

Multimodal Interaction Isn't Always a Good Thing

Google Home speakers rely heavily on the mobile app for onboarding. Even though the two supposedly work together, they never seemed to talk to each other. We never knew whether the speaker or the phone would respond to "Hey Google". Because we tested a speaker with a screen, having two screens compounded participant confusion.

Connecting a speaker: “Should I keep trying?”

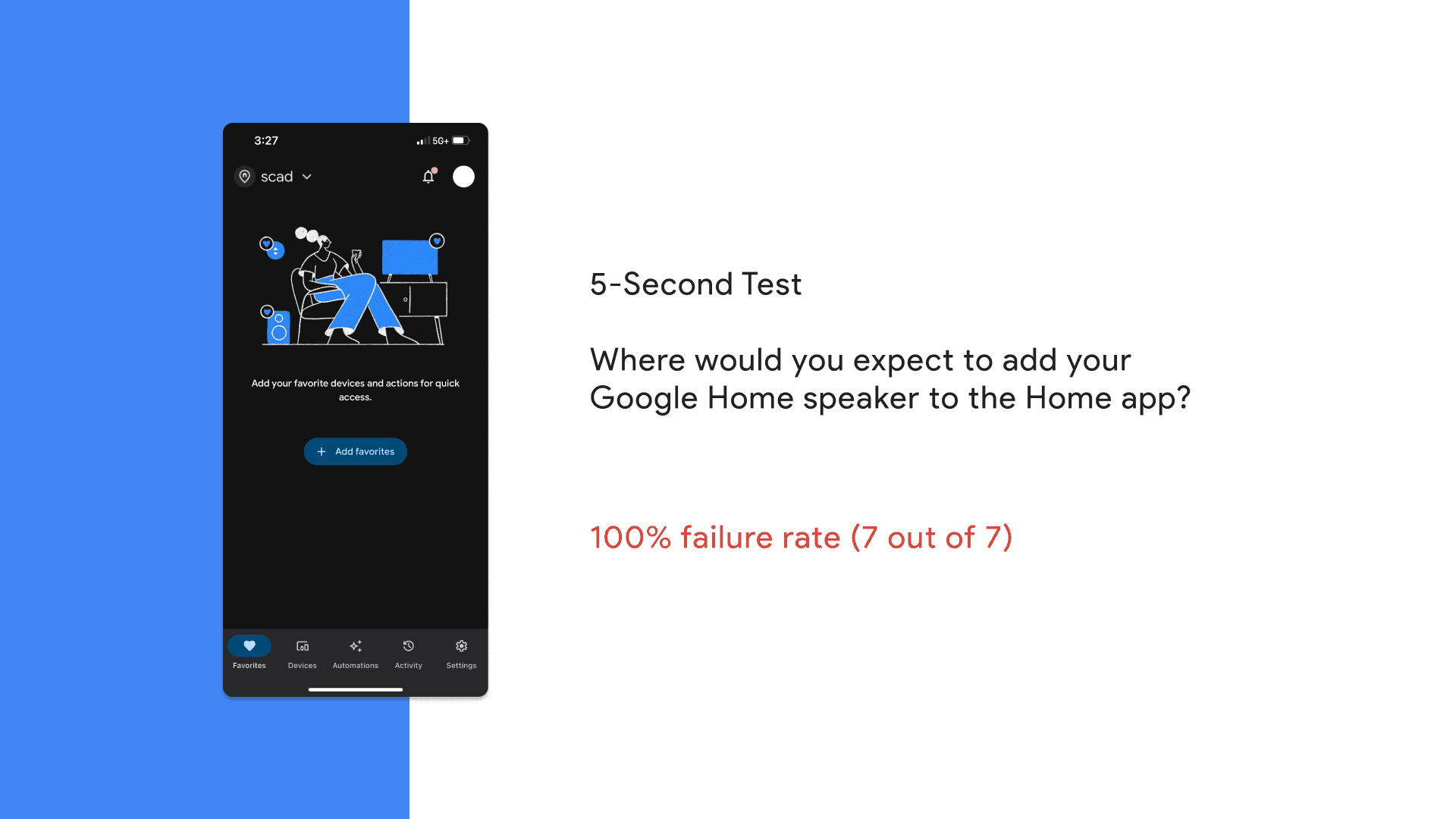

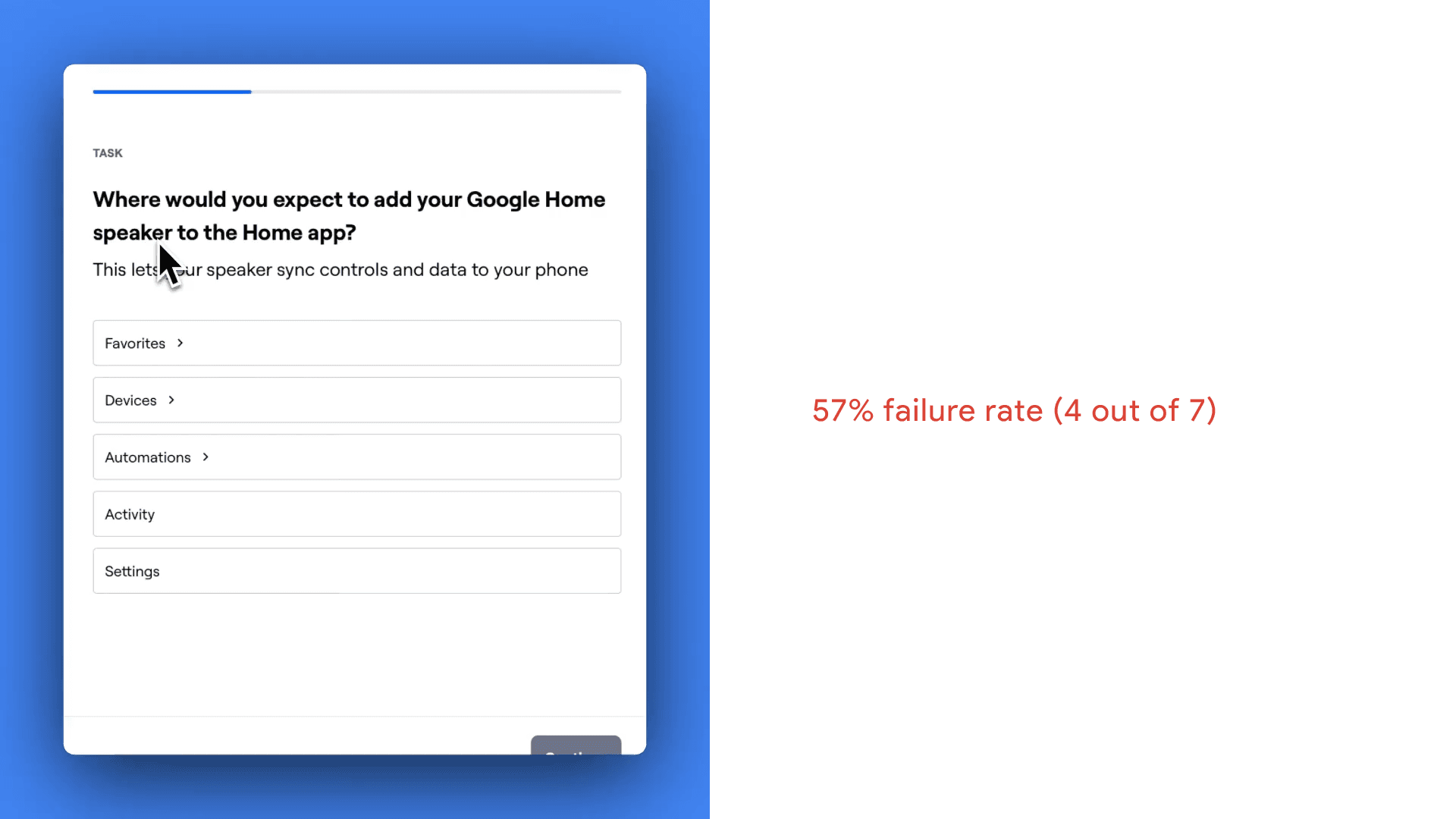

Flaws in Google Home's UX writing and information architecture left participants confused. One participant gave up entirely (a 100% failure on that test), while every other participant made multiple errors before finding where to add a speaker. The extensive menus often led users down the wrong paths for too long.

Nobody could connect a smart bulb/plug.

We budgeted 10 minutes for our smart bulb task. Every participant ended up spending more than double that (~25 minutes) trying before giving up and failing.

Facepalms 🤦 and frustrating moments

Beyond the errors, our 7+ hours of video footage captured negative facial expressions, comments, and outright frustration.

Scenarios with Google Assistant

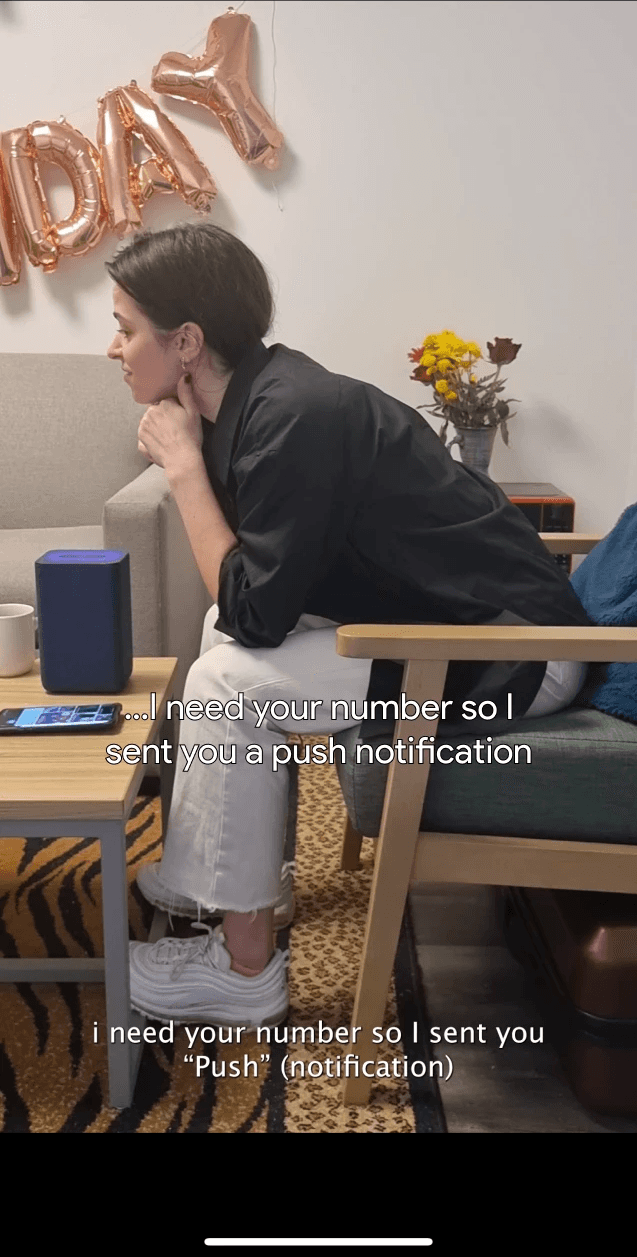

We put participants in hands-free and "eyes-free" scenarios to test Google Assistant's VUI. The responses were almost never helpful, and even when they were remotely helpful, participants had to abandon their scenario tasks to see the results on the screen or phone. What a greaaatttt assistant.

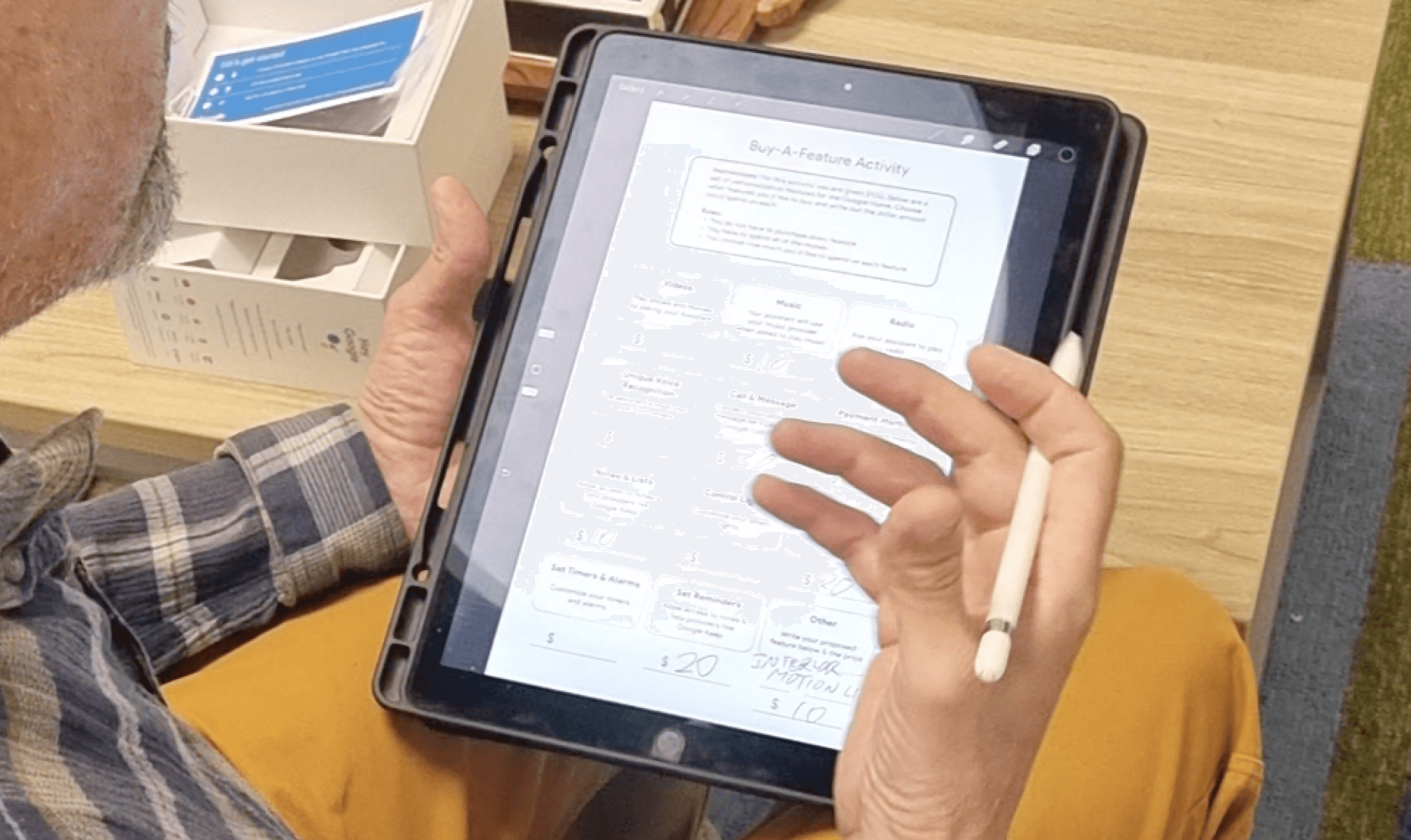

Participatory co-design

At the end of the test, one of the follow-up interview sections I designed was a "Buy-A-Feature" activity, where participants prioritized their ideal features (and suggested their own) by allocating dollars from a $100 budget.
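One straightforward way to read Buy-A-Feature results is to sum each feature's dollars across participants and rank the totals. Here is a hypothetical Python sketch of that tally (not the analysis we actually ran); the feature names are invented, and the only rule enforced is the $100 budget described above.

```python
from collections import Counter

BUDGET = 100  # each participant gets $100 to spend


def tally_buy_a_feature(allocations: list[dict[str, int]]) -> list[tuple[str, int]]:
    """Sum each feature's dollars across participants and rank features by total."""
    totals = Counter()
    for spend in allocations:
        if any(amount < 0 for amount in spend.values()):
            raise ValueError("Amounts must be non-negative")
        if sum(spend.values()) > BUDGET:
            raise ValueError(f"Allocation exceeds the ${BUDGET} budget")
        totals.update(spend)  # adds each feature's dollars to its running total
    return totals.most_common()  # highest total first


# Hypothetical allocations from two participants
ranking = tally_buy_a_feature([
    {"Voice-first setup": 70, "Proactive suggestions": 30},
    {"Proactive suggestions": 60, "Fewer app screens": 40},
])
print(ranking)
```

Summing dollars rewards features that many participants fund moderately as well as ones a single participant funds heavily, so it's worth reading the per-participant splits alongside the ranking.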

Referencing similar tests

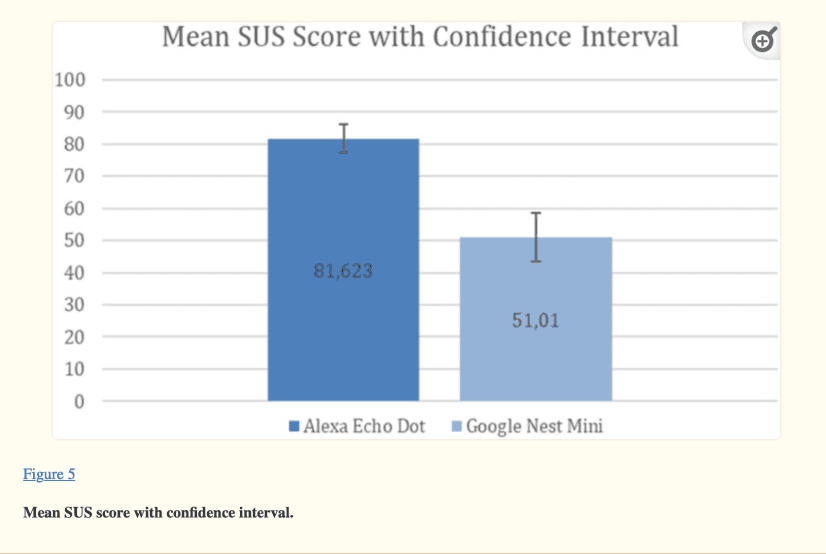

Since we had a small sample size, quantitative data was not my main concern, but it still provided valid data points. To make sense of them, I compared our results with a peer-reviewed academic article that ran usability testing with 16 participants. That study also measured an F-grade SUS score for Google Home.

Benchmarking Yandex Station

We recruited Russian-speaking participants to run the exact same usability test with Yandex Station/Alice, since we expected it to perform the best of all the competitors. Its dramatically better performance set a baseline for where Google Home needed to be.

Design

We verified our processes with Google mentors, who agreed that we successfully identified problems and arrived at insights that made sense.

With the problems identified, it was time to answer them with a solution we could test.

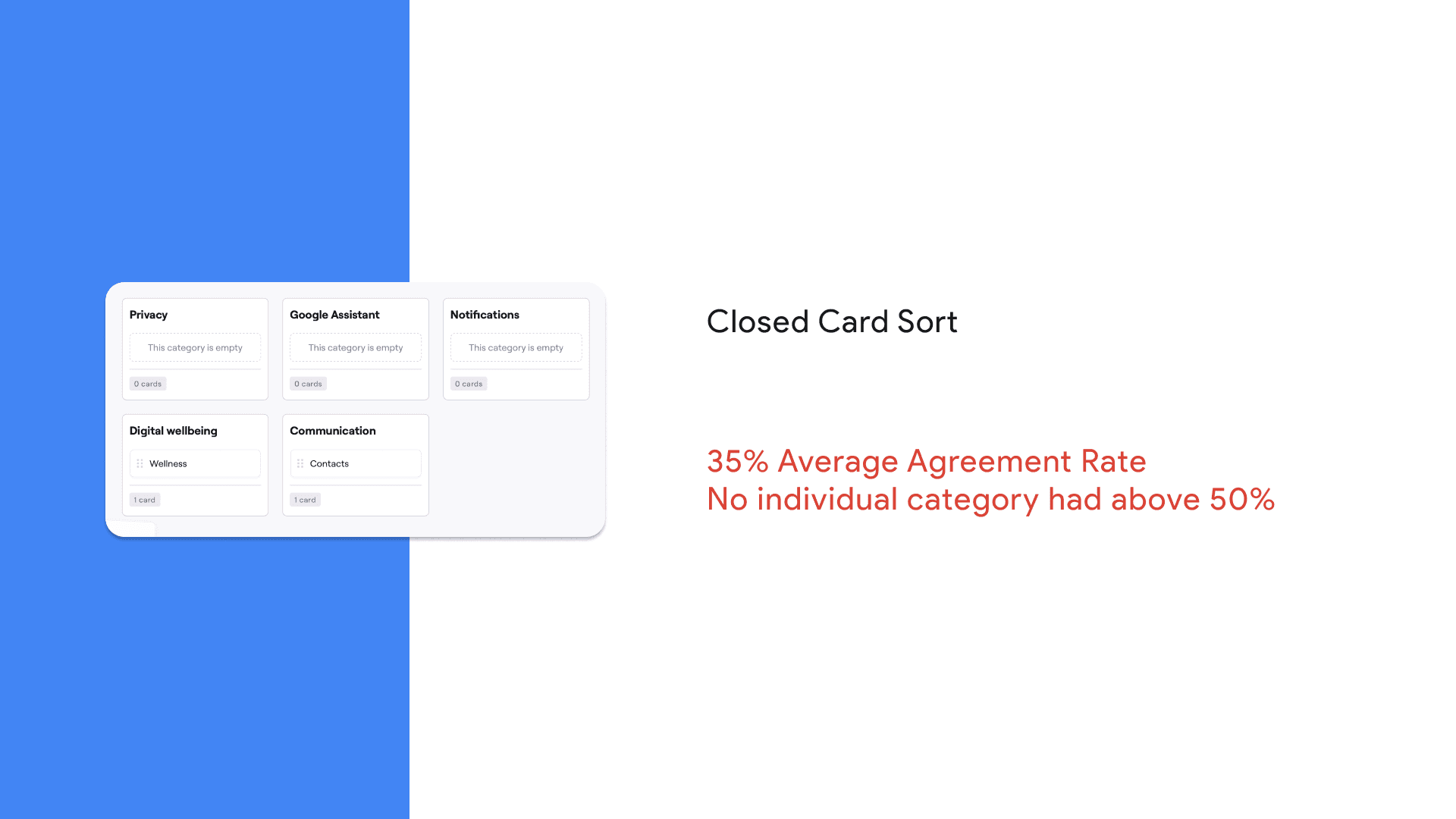

Designing a solution meant diving deeper into specifics like the Google Home app's information architecture. To cover the gaps our usability tests missed, I sent out more unmoderated tests.

App Navigation: More thinking, finding, and troubleshooting.

Cognition (user thinks) → App launch (user opens app) → UI interaction (user navigates menus) → Search & retrieval (user finds command) → Execute command (speaker executes)

Voice Command: Less thinking, more doing

Cognition (user thinks) → Voice recognition (user speaks command) → NLP (speaker interprets) → Execute command (speaker executes)

If all we did was make a more usable Google Home app, it still would not compete with smarter AI assistants.

Navigating a mobile interface can never be as frictionless as voice-to-action. Google Assistant has been so unreliable that the mobile app became the go-to option. But competitors like Yandex Station now have significantly more sophisticated voice interfaces, proving that AI assistance can truly enhance user interactions and putting them light-years ahead of Google Home.

Voice is our most natural form of communication, so users won't have to adapt to the technology as much.

Defining Ideal Conversations and Behavior

Designing a voice user interface was an entirely new ballpark for us. The solution is not as simple as throwing AI at the problem. I knew that AI responses had to be intentional and trained to provide the most useful and actionable help.

This new Google Home prototype's VUI was designed based on user preferences, ideal solutions, and industry guidelines.

Understanding people's "blue-sky" ideas of what an AI assistant could be like

Leveraging Maze's AI features, we probed for people's ideal experiences with open-ended follow-up questions, which saved us from conducting more interviews in the project's last sprint.

Creating a System Persona: We gauged users' preferences for how Google Home should come across.

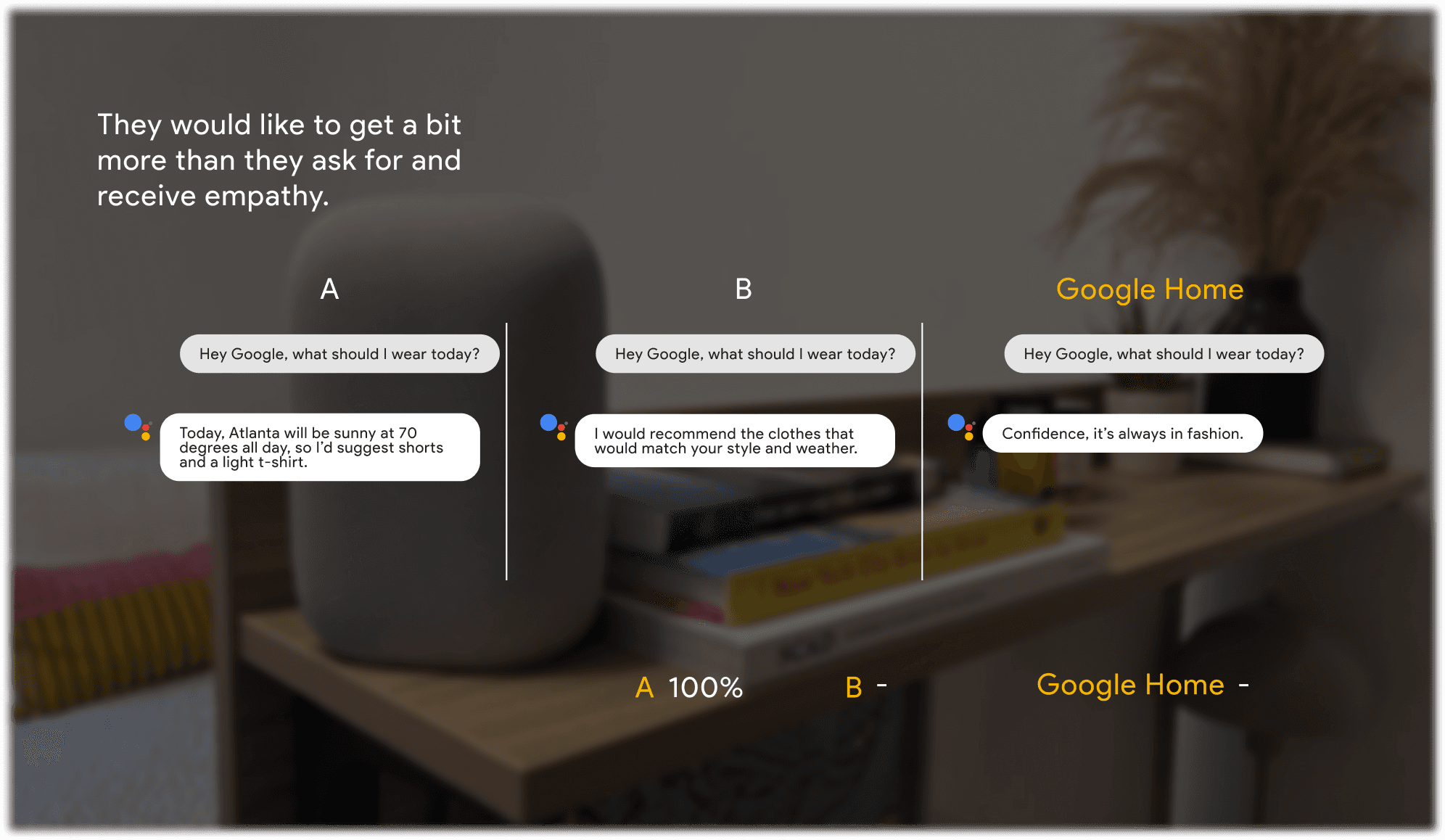

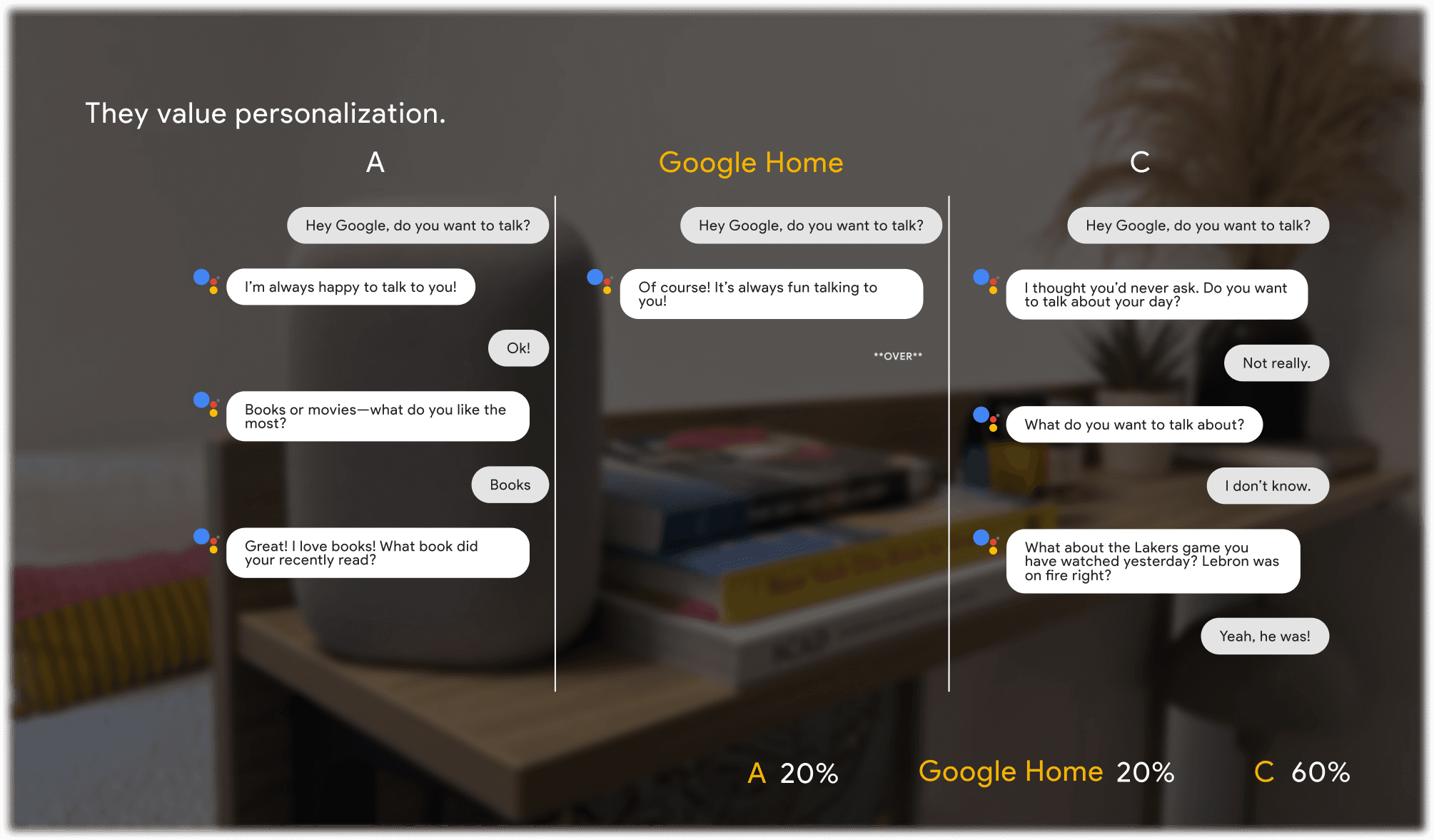

Without telling them which was which, we showed 10 participants three different responses to the same question across 8 scenarios: Google Home's (Assistant's) current response, Yandex Station's (Alice's), and one we wrote ourselves.
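Mechanically, a blind comparison like this needs two pieces: a per-participant shuffle that hides which source is which behind neutral labels (A, B, C), and an answer key that maps each pick back to its source when tallying. Here is a minimal Python sketch, assuming the source labels and seeding scheme below (both hypothetical; this is not how the study was actually set up in Maze).

```python
import random
from collections import Counter

# Hypothetical labels for the three response sources
SOURCES = ["google_assistant", "yandex_alice", "our_concept"]
LABELS = "ABC"  # the neutral labels participants actually see


def blind_order(participant_id: int, scenario_id: int, seed: int = 0) -> list[str]:
    """Shuffle the sources for one participant/scenario; the same inputs give the same order."""
    rng = random.Random(f"{seed}-{participant_id}-{scenario_id}")
    order = SOURCES.copy()
    rng.shuffle(order)
    return order


def unblind(order: list[str], choice: str) -> str:
    """Map a participant's pick ("A", "B" or "C") back to the hidden source."""
    return order[LABELS.index(choice)]


def tally(picks: list[tuple[list[str], str]]) -> Counter:
    """Count how often each source was preferred across all (order shown, choice) pairs."""
    return Counter(unblind(order, choice) for order, choice in picks)


if __name__ == "__main__":
    # One answer key per participant x scenario (10 x 8 in the study described above)
    answer_key = {(p, s): blind_order(p, s) for p in range(10) for s in range(8)}
    print(dict(zip(LABELS, answer_key[(0, 0)])))
```

Seeding the shuffle from the participant and scenario IDs keeps the answer key reproducible while still varying the order, so no source benefits from always being shown first.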

VUI Design & Sample Dialogs


Now that we had learned more about people's preferences for AI assistants' behavior and functionality, I created sample dialogs as a framework for Gemini at Home.

The Happy Path

I made sure each of Gemini's responses was natural, conversational, and concise by referring to Google's VUI design guidelines, and that each aligned with both Google Gemini's brand identity and our AI persona.

I ensured that the responses provided enough information to keep the conversation engaging and effectively guided the user’s responses without explicitly dictating what they should say.

Use questions

Ensure that questions clearly indicate a response is expected, without explicitly stating what to say. Place the question at the end, as users typically answer right after hearing it.

Provide feedback and clear follow-up prompts

Acknowledge the user's response to show it was received and understood.

Use yes/no questions to help structure the user's response.

Reduce cognitive load and let users take their turn

Provide just enough information for the user at the moment and clearly indicate that a response is expected to proceed.

Give the user a chance to think and respond before offering further assistance or moving on.

Gemini: Hey look, we’re connected! I'd love to help you get started. I’m gonna ask you a few quick questions to set up your device. But hey, if you need to, you can always finish the setup on your phone, but I prefer a conversation. Wanna keep talking?

User: Sure!

Gemini: Awesome, I prefer a conversation anyway. By the way, can you hear me okay?

User: Yes, I can.

Gemini: Ok, that's what I thought, I just wanted to be sure. I’m not sure which room in your home this device will be in. Could you tell me where you’d like to place it? Maybe the bedroom, living room, or office? You can also give the room a custom name if you’d like!

User: *thinking* Let’s do the living room.

Gemini: Great! I’ll be living in the living room! Next on the list, let's dive in with a few quick questions to get you started! If you're in a hurry, you can just say "skip" at any time and we'll move right along. Are you ready?

Intents/Synonyms

Users often express the same ideas in ways we didn't script for.

So to make the prototype more robust, I came up with synonyms for each expected input. This way, no matter how they say it, Gemini is ready to respond naturally.

Leveraging an LLM makes the experience more frictionless: the assistant can better interpret users' intents in whatever way they're most comfortable or used to talking.

User wants to keep talking

Sure

Yes

I prefer a conversation too

Let’s keep talking

User wants to increase volume

No, you’re too quiet

Can you speak up?

Louder

Turn volume higher

User makes room selection

Living room

The second one

User is ready

Yes

I’m ready

Ok!
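Conceptually, the synonym lists above work like a small intent table. The Python sketch below uses exact phrase matching after light normalization, a deliberate simplification (an LLM like Gemini generalizes far beyond listed phrases, and our test was Wizard of Oz rather than automated); the intent names are hypothetical labels. It does surface one design detail: "Yes" appears under both "User wants to keep talking" and "User is ready", so matching has to be scoped to the intents expected at the current turn.

```python
import string
from typing import Optional

# Intent -> example phrasings, copied from the synonym lists above.
# Intent names are hypothetical labels for this sketch.
INTENTS: dict[str, list[str]] = {
    "keep_talking": ["Sure", "Yes", "I prefer a conversation too", "Let's keep talking"],
    "increase_volume": ["No, you're too quiet", "Can you speak up?", "Louder", "Turn volume higher"],
    "select_room": ["Living room", "The second one"],
    "ready": ["Yes", "I'm ready", "Ok!"],
}


def normalize(text: str) -> str:
    """Lowercase, unify curly apostrophes, drop punctuation, and collapse whitespace."""
    text = text.replace("\u2019", "'").lower()
    text = text.translate(str.maketrans("", "", string.punctuation))
    return " ".join(text.split())


def match_intent(utterance: str, expected: list[str]) -> Optional[str]:
    """Return the first intent expected at this turn whose phrasing matches, else None."""
    said = normalize(utterance)
    for intent in expected:
        if any(normalize(phrase) == said for phrase in INTENTS[intent]):
            return intent
    return None  # unmatched: hand off to digression handling or re-prompt


# After "Wanna keep talking?", only keep_talking and increase_volume are in play
print(match_intent("Yes!", ["keep_talking", "increase_volume"]))
```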


Digression/Combo-breakers

I also anticipated that a user might go completely off-script, replying with questions or unexpected options. To keep the conversation flowing, I listed potential digression paths so Gemini can switch context when needed and maintain a smooth interaction. In this case, though, I wanted Gemini to redirect users back to the main onboarding task.

Gemini handles transitions between different threads of conversation well, so it can adapt to the user's wants and needs at any given time.

Alternative Responses

No thanks

I wanna use my phone

Can I use the app?

Can we do this later?

Alternative Responses

Yes, but can we skip to connecting to Spotify?

Alternative Responses

I’m confused

The man cave

Patio

I’m not sure yet

I’m in a hotel right now
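The digression paths can be sketched the same way: check an off-script reply for cues, handle it, then steer back to the onboarding question that's still pending. This is a hypothetical Python sketch; the cue words are loosely grouped from the alternative responses above, and the reply wording is invented for illustration rather than taken from our dialogs.

```python
import string
from typing import Optional, Tuple

# Hypothetical keyword cues, loosely grouped from the alternative responses above
DIGRESSION_CUES = {
    "hand_off_to_phone": ["no thanks", "phone", "app", "later"],
    "skip_ahead": ["skip"],
    "needs_help": ["confused", "not sure"],
}


def normalize(text: str) -> str:
    """Lowercase, unify curly apostrophes, drop punctuation, and collapse whitespace."""
    text = text.replace("\u2019", "'").lower()
    text = text.translate(str.maketrans("", "", string.punctuation))
    return " ".join(text.split())


def detect_digression(utterance: str) -> Optional[str]:
    """Return the first digression whose cue appears as whole words, else None."""
    padded = f" {normalize(utterance)} "  # padding lets " app " skip words like "happy"
    for digression, cues in DIGRESSION_CUES.items():
        if any(f" {cue} " in padded for cue in cues):
            return digression
    return None


def handle_turn(utterance: str, pending_question: str) -> Tuple[str, str]:
    """Handle an off-script reply, then steer back to the pending onboarding question."""
    digression = detect_digression(utterance)
    if digression == "hand_off_to_phone":
        return digression, "No problem, you can finish setting up in the Google Home app anytime."
    if digression == "skip_ahead":
        return digression, "Sure, we'll get to that right after this. " + pending_question
    if digression == "needs_help":
        return digression, "No worries, let me explain. " + pending_question
    # Not a digression: treat the reply as an answer (e.g. a custom room name like "Patio")
    return "answer", ""


question = "Could you tell me where you'd like to place it?"
print(handle_turn("I'm not sure yet", question))
```

Keyword cues like these are brittle: "I’m in a hotel right now" falls through and would be treated as a room name, which is exactly the kind of context an LLM-backed assistant is better at catching.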


VUI Prototyping

During the final sprint of the project, we were juggling final course deliverables while trying to squeeze in pilot tests and a final usability test that mirrored the original. None of these were budgeted into our course syllabus, so I knew I had to act fast.

To find out whether my solution was actually better than the original Google Home, we tested it.

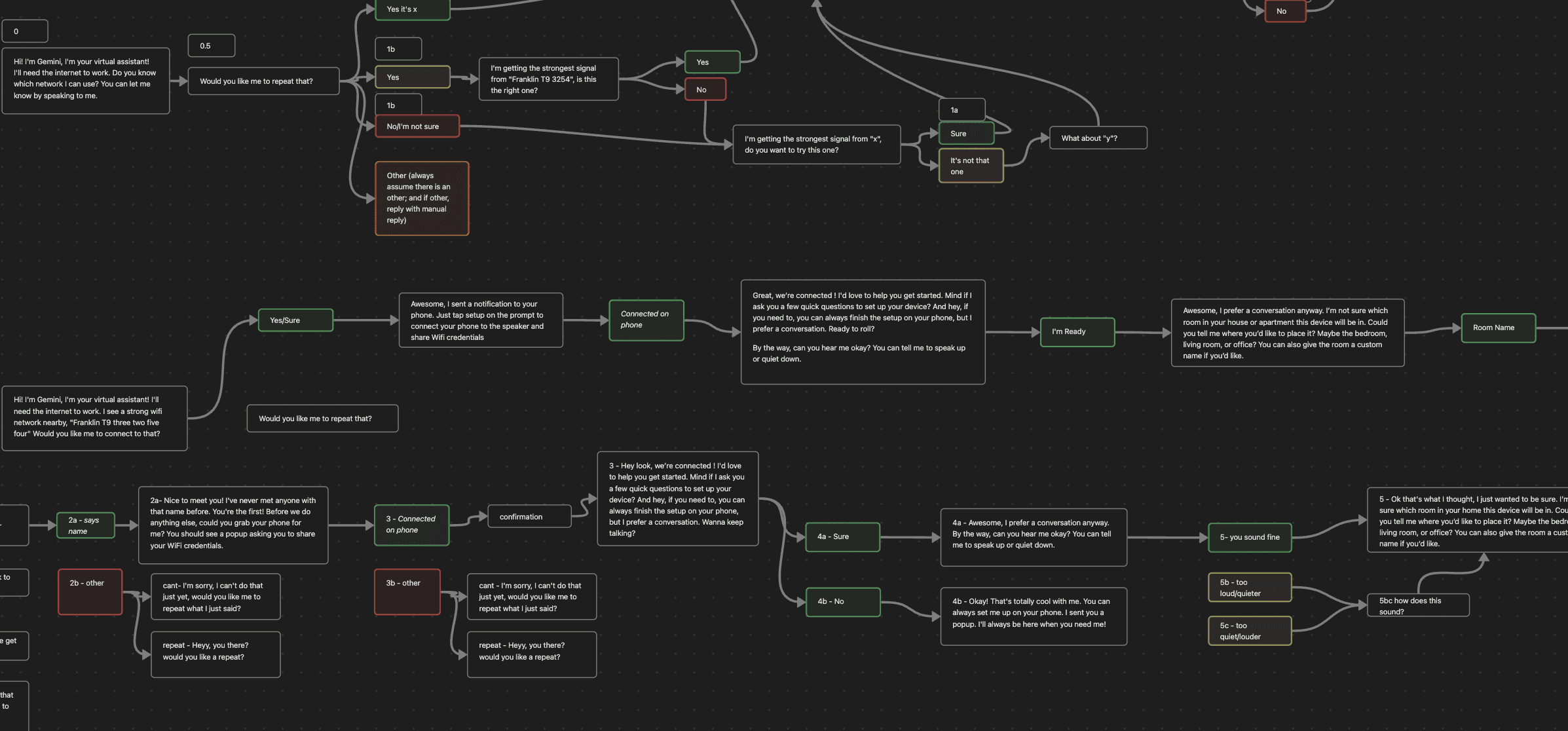

User Flows + Table Reading

Based on the sample dialogs I created, I came up with responses to the many things a participant might say during the user journey. These were easier to anticipate because we had already run through the same test multiple times.

I also ran this as a "role-play" table read with my group to find anything participants might say that would leave us without a good response.

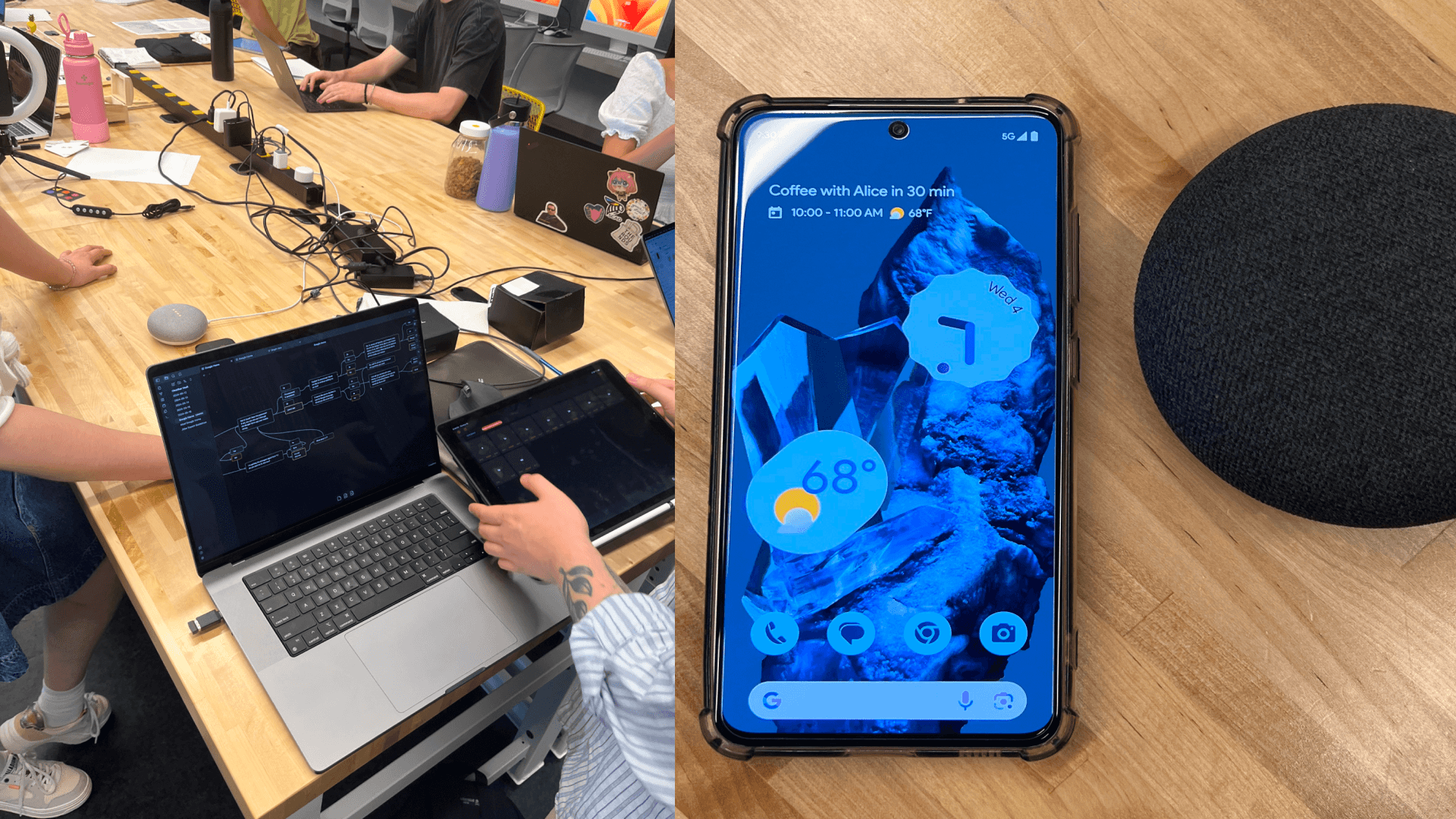

Creating a Prototype to Test

Although I could have used Google's Dialogflow, Speechify, or other voice prototyping tools, I needed something cheap, quick, and easy that could be tested as soon as possible.

There wasn't much time left for experimenting, so I went with what I knew I could make work.

It was scrappy: I took apart a Google Home and put a mini speaker inside it.

Simulating a new Google Home

Two days later, it was ready for our Wizard of Oz test. I mapped the responses onto a soundboard connected to the modified Google Home speaker. I also remotely controlled a Figma phone prototype for moments when the speaker needed to interact with the phone automatically.

What I Learned

Over these 9 weeks, I pushed our team beyond the deliverables to produce something impactful. This entailed challenging myself and others to learn new skills. You only get better through experience, so here are my takeaways.

Reflections

Over-document my documentation.

As a designer, I knew I'd always need to reference interviews, surveys, etc. Even though I've used transcription tools in the past, for some reason I didn't use them for the hours of footage we recorded (silly me). Sorting through videos to find key quotes and interactions was a nightmare.

Don't ask, just go. (Within reason)

I think one thing that separates a senior designer from a junior is strategy. Throughout this project, I constantly asked "why" for every task and decision we made. Where I struggled was hesitating on decisions while waiting to discuss them with my professor or mentors. Making informed decisions quickly as a team kept us moving, but we definitely could have accomplished more by being less afraid to make mistakes.

Know your team's strengths and weaknesses

Although there were no defined roles, I naturally took on more of a leadership role to boost our team's productivity. Because I wanted to push for more ambitious outcomes than originally planned, the biggest challenge was aligning the team with my personal goals for the project.

Impact

What our final tests showed

Although this was a course project, I saw no reason not to create an impactful outcome. Here are the results.

UX Metrics

100%

Increase in SUS score

My prototype doubled Google Home's System Usability Scale (SUS) score from 40.0 to 80.0 (F to A)

80%

Increase in CSAT score

Users were dramatically more satisfied with onboarding and AI assistance.

50%

Decrease in onboarding time discrepancy (expectations vs. actual)

Average onboarding time dropped from around 19 minutes to under 10 minutes, and users also had the option to skip it entirely.

Bonus: This metric may not even be relevant anymore, as users expressed that they felt the new onboarding experience was enjoyable and they didn't mind it.

0

No errors or failures

My prototype's VUI performed so much better than Google Home's GUI that users never ran into navigation or troubleshooting issues.

+167

Point increase in NPS (Net Promoter Score)

Previously, no test participant would recommend Google Home (NPS of -100; all detractors). With the new prototype, 1 participant gave it an 8 (passive), while the other 2 gave it a 9 and a 10 (promoters), for an NPS of roughly +67.
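NPS is the percentage of promoters (scores of 9-10) minus the percentage of detractors (0-6), so it ranges from -100 to +100. Below is a minimal Python sketch of that formula applied to the scores above; the exact "before" scores weren't reported here, only that all three participants were detractors, so three 6s stand in as placeholders.

```python
def nps(scores: list[int]) -> float:
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6), from -100 to +100."""
    if not scores:
        raise ValueError("Need at least one score")
    if any(s < 0 or s > 10 for s in scores):
        raise ValueError("Scores must be on the 0-10 scale")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


after = nps([8, 9, 10])  # 1 passive, 2 promoters
before = nps([6, 6, 6])  # placeholder detractor scores; any three scores from 0-6 give -100
print(f"before={before:.1f}, after={after:.1f}, change={after - before:.1f} points")
```

With 2 promoters and no detractors out of 3, the new score is about +66.7, so moving from -100 is a change of roughly 166.7 points. Because the baseline is negative, that change reads more naturally in points than as a percentage.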

Sure, we had a small sample size, so some of these numbers might be overblown.

But what can't be disputed is human emotion.


Contact Me

Currently based in Atlanta, GA, but I love moving around.

You made it this far, so we might as well talk.

I promise it's worth it.

Designed and built by Kevin Esmael Liu, 2024 | No rights reserved: steal whatever you want bc I'll just come up with something better.